SANTA CLARA — NVIDIA is advancing the field of physical AI by introducing specialized computing platforms designed to bring intelligence to real-world industrial systems. While generative AI has already transformed digital sectors, physical AI—artificial intelligence embodied in physical systems like robots and automated factories—has been slower to take off. However, with NVIDIA’s new suite of tools for training, simulation, and deployment, the era of physical AI is rapidly approaching.

The Foundation of Physical AI Development

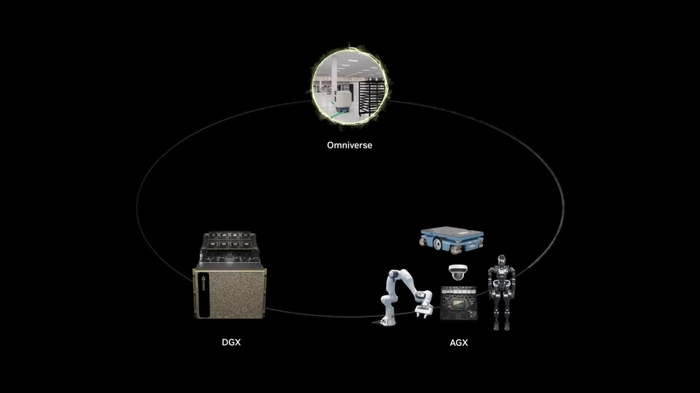

To accelerate the adoption of physical AI, NVIDIA has introduced three distinct computing platforms that collectively enable the development, testing, and deployment of intelligent robotic systems:

- Training

NVIDIA’s first platform focuses on the training phase, in which models are developed using massive data sets and generative AI. NVIDIA NeMo on the DGX platform supports training the foundation models that let robots understand language, learn from visual cues, and recognize complex patterns. Through Project GR00T, NVIDIA is also developing foundation models that teach humanoid robots to interpret human language and movements.

- Simulation

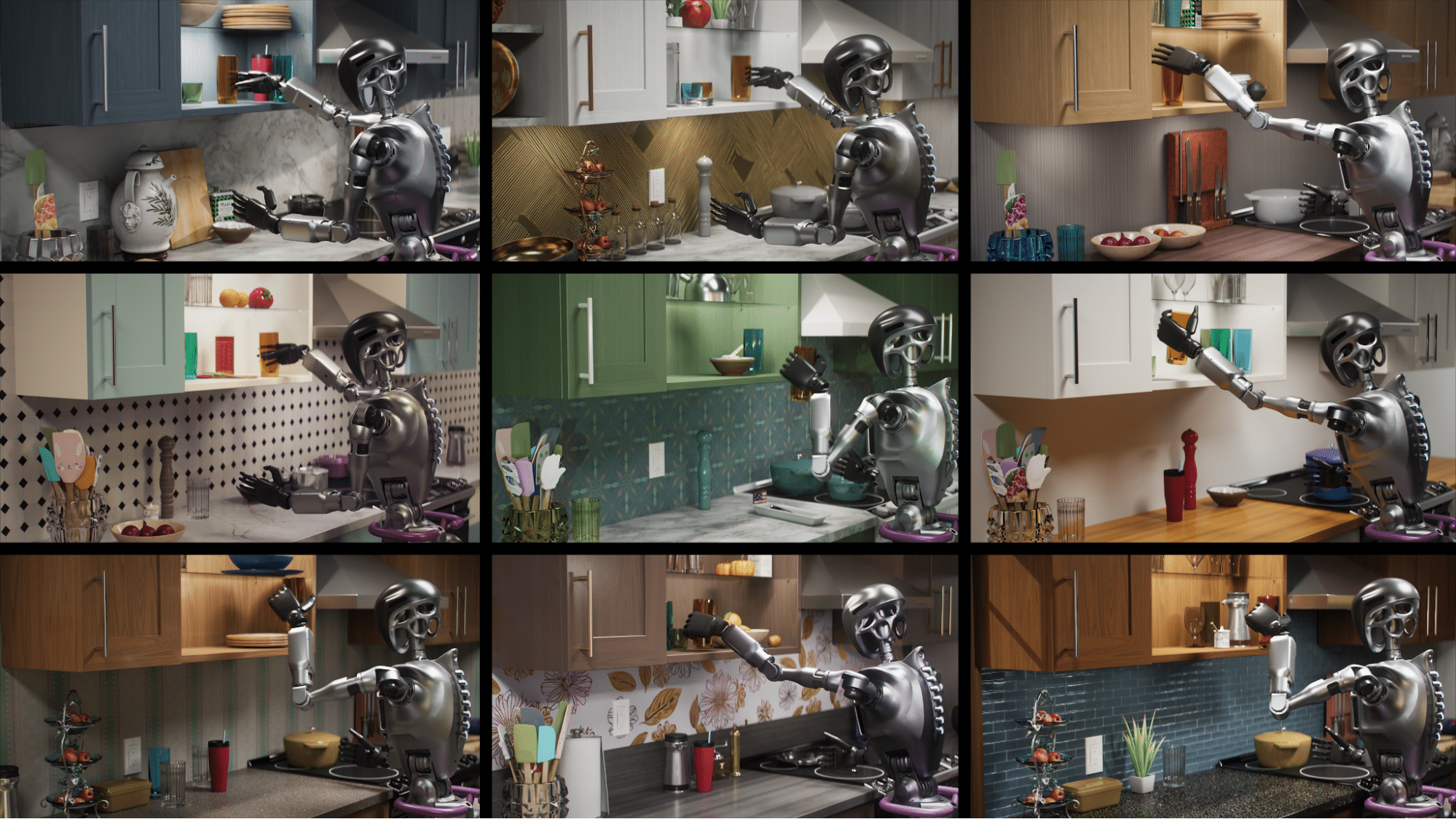

The second platform, powered by NVIDIA Omniverse on OVX servers, lets developers simulate and refine physical AI models in realistic, physics-based virtual environments. With NVIDIA Isaac Sim, developers can test robots and AI models under diverse conditions, reducing the need for extensive real-world testing. Simulation also produces synthetic data that can be used for training. Isaac Lab, built on Isaac Sim, supports reinforcement learning and imitation learning to improve robot performance.

- Deployment

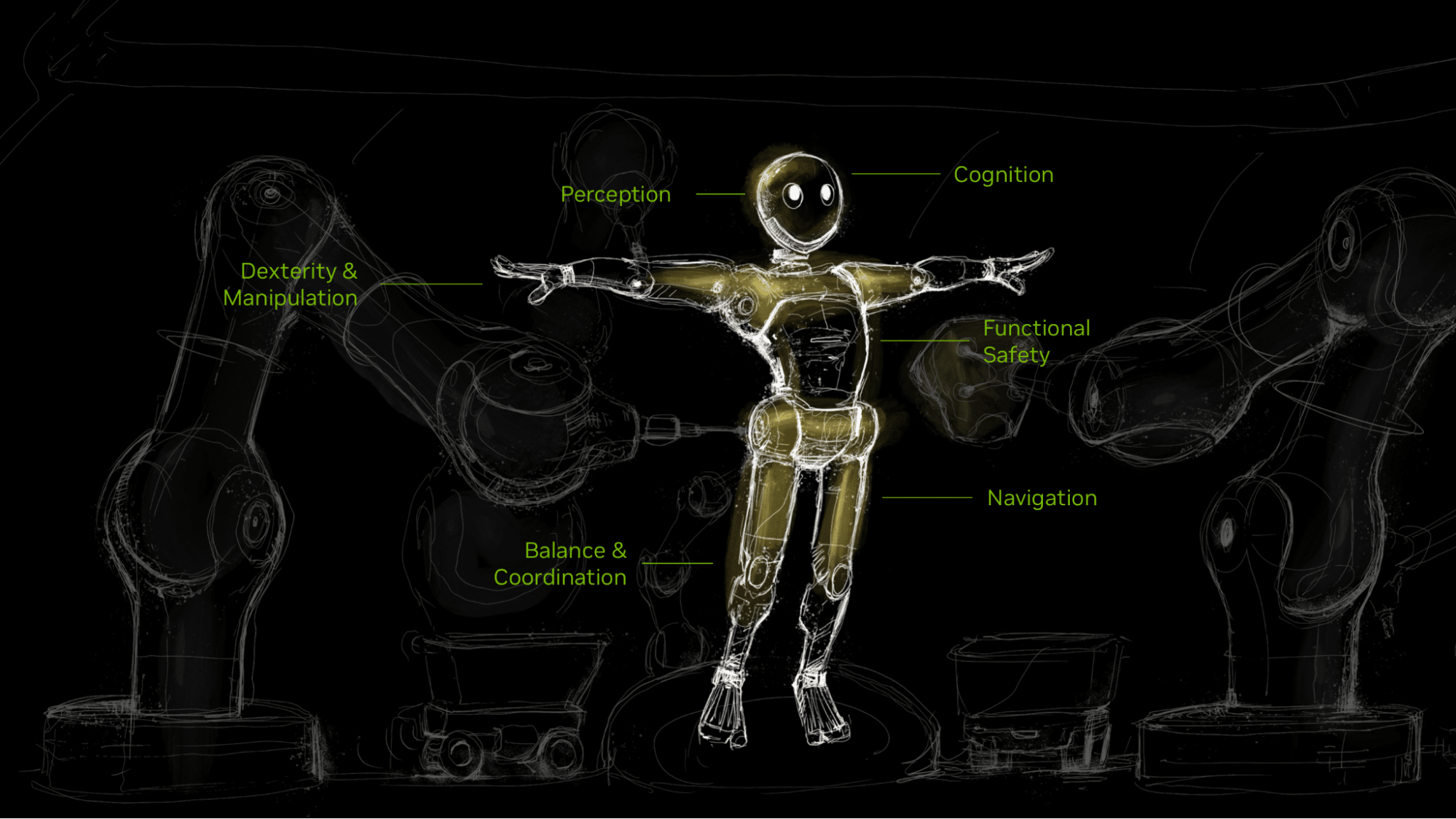

For the final stage, NVIDIA provides NVIDIA Jetson Thor robotics computers, which are optimized for compact, efficient on-device computing. This edge computing platform lets AI models run on the robot in real time, powering perception, control, and reasoning directly in the field. Acting as the robot’s “brain,” Jetson Thor allows robots to sense and respond dynamically to their environments.

Applications in Industry: The Next Phase of Automation

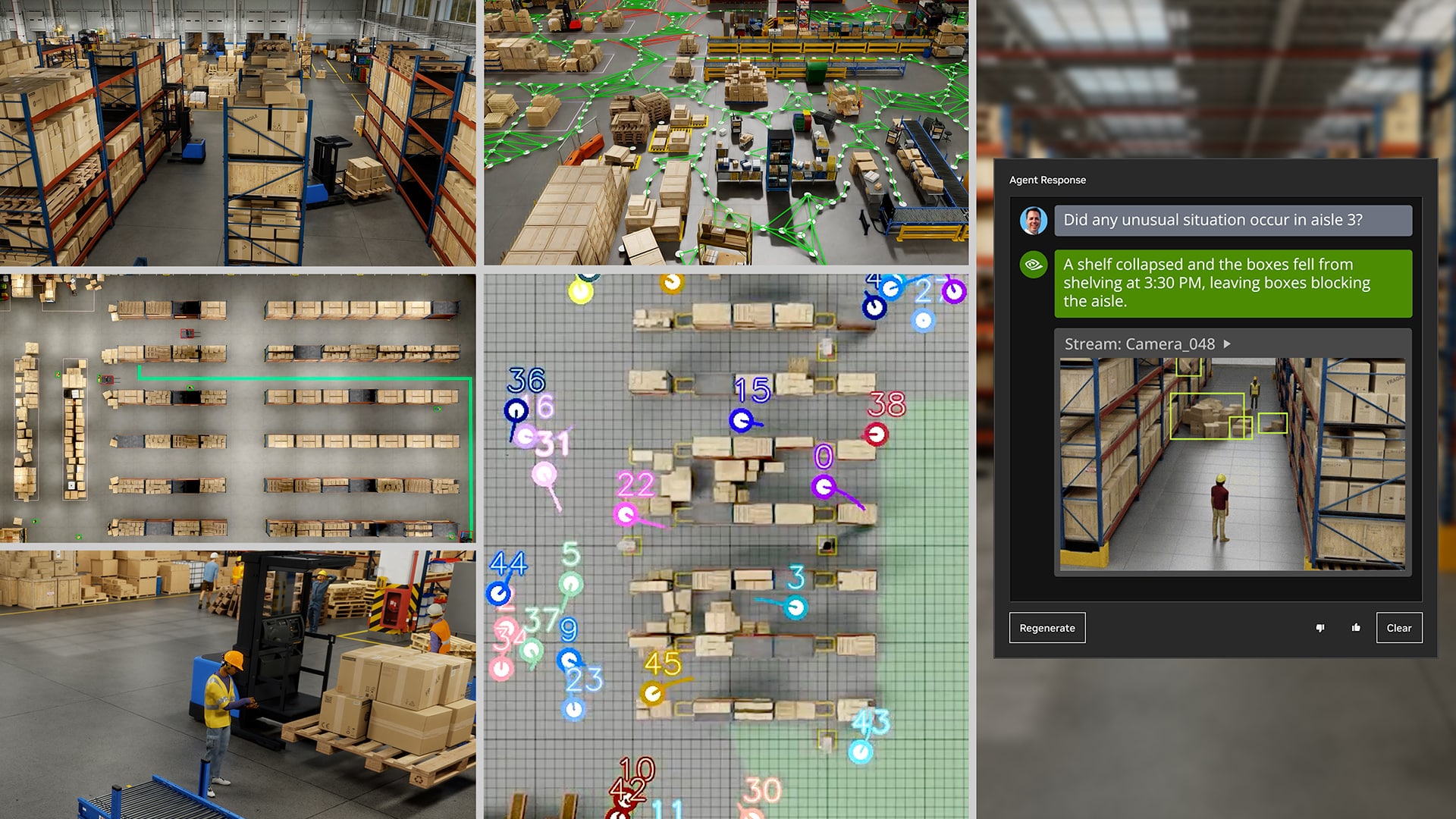

NVIDIA’s advancements in physical AI are expected to drive transformative changes across industries, from manufacturing and logistics to healthcare and urban planning. For example, autonomous factories and warehouses can leverage robotic arms, mobile robots, and digital twin technology to streamline operations, reduce labor costs, and adapt quickly to changes. Companies such as Foxconn and Amazon Robotics are already exploring these capabilities, using digital twins—virtual replicas of physical environments—to plan and optimize layouts, track robotic performance, and run software-in-the-loop tests before deploying real robots in live settings.

NVIDIA’s digital twin platform, “Mega,” allows developers to populate these digital replicas with simulated robots to test processes in virtual space. In the digital twin, robots perform tasks such as navigating around obstacles, handling objects, and interacting with other machines, all under simulated physical constraints. This simulation-based approach helps enterprises validate that robot fleets will perform as expected before they are deployed in real facilities.

Humanoid Robots: Preparing for a General-Purpose Future

Humanoid robots, designed to operate in human-oriented environments, represent a key area for physical AI. With projections estimating a $38 billion market by 2035, companies and researchers are investing heavily in developing robots that can assist in high-interaction environments such as healthcare, scientific research, and customer service. Using NVIDIA’s platforms, companies like Boston Dynamics and Agility Robotics are enhancing humanoid robot capabilities, allowing them to navigate, perceive, and perform tasks autonomously in dynamic environments.

A Transformational Step for Robotics Development

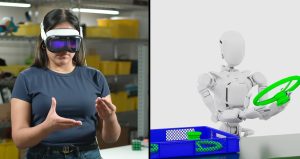

By enabling developers to simulate complex interactions, NVIDIA’s platforms support the training of robots that can perceive, interpret, and respond to a three-dimensional world. RGo Robotics, for example, uses NVIDIA’s Isaac platform to enable autonomous mobile robots to make context-aware decisions, navigating through varied and unpredictable spaces. For humanoid robot makers, the NVIDIA ecosystem offers the tools to advance perception and motor skills, allowing robots to perform tasks that would traditionally require human intuition and dexterity.

Shaping the Future of Physical AI

NVIDIA’s investment in physical AI is paving the way for intelligent machines that operate across sectors, from smart cities to autonomous medical facilities. By building the technological backbone that lets robots think, move, and act independently, NVIDIA is advancing the robotics industry and laying the foundation for autonomous systems that work alongside humans to improve productivity, safety, and sustainability.

In summary, NVIDIA’s comprehensive approach to physical AI—covering training, simulation, and deployment—marks a significant leap toward the widespread adoption of intelligent robotics, ushering in a new era of autonomous industrial solutions and reshaping how the world approaches complex, real-world tasks.
