MENLO PARK — Meta is proud to introduce Llama 3, the latest and most powerful generation of its large language model (LLM) suite. Available starting today, Llama 3 sets a new standard in open-source AI with two models featuring 8 billion and 70 billion parameters. These models promise enhanced performance across a wide array of use cases, from reasoning and creative writing to coding and summarization, and are optimized to meet real-world demands.

Llama 3 models will be available on platforms such as AWS, Google Cloud, Hugging Face, Microsoft Azure, Databricks, and others. Additionally, support from hardware providers like AMD, Dell, Intel, NVIDIA, and Qualcomm ensures that Llama 3 can be deployed at scale across various ecosystems.

A Leap Forward in AI Performance

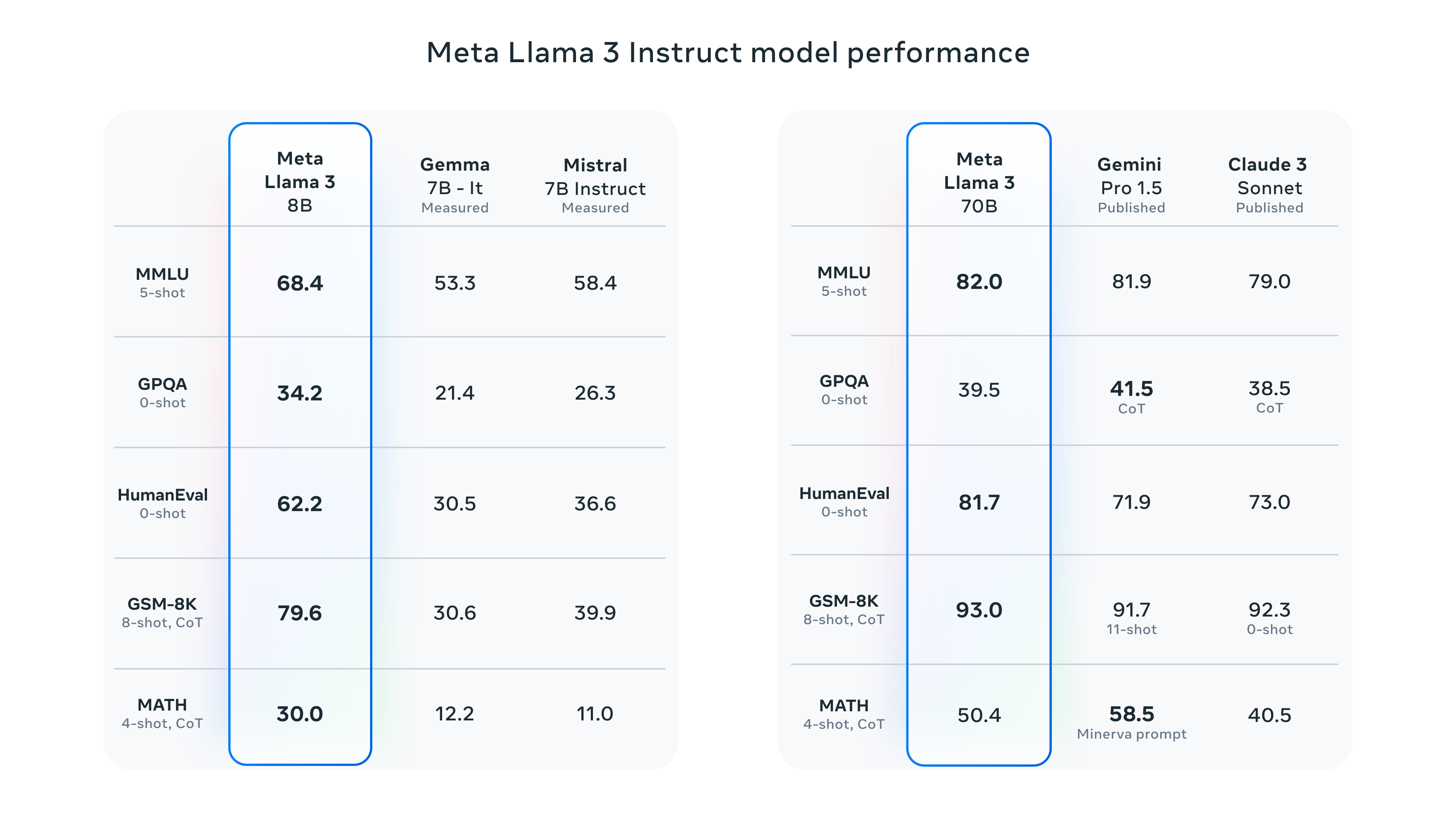

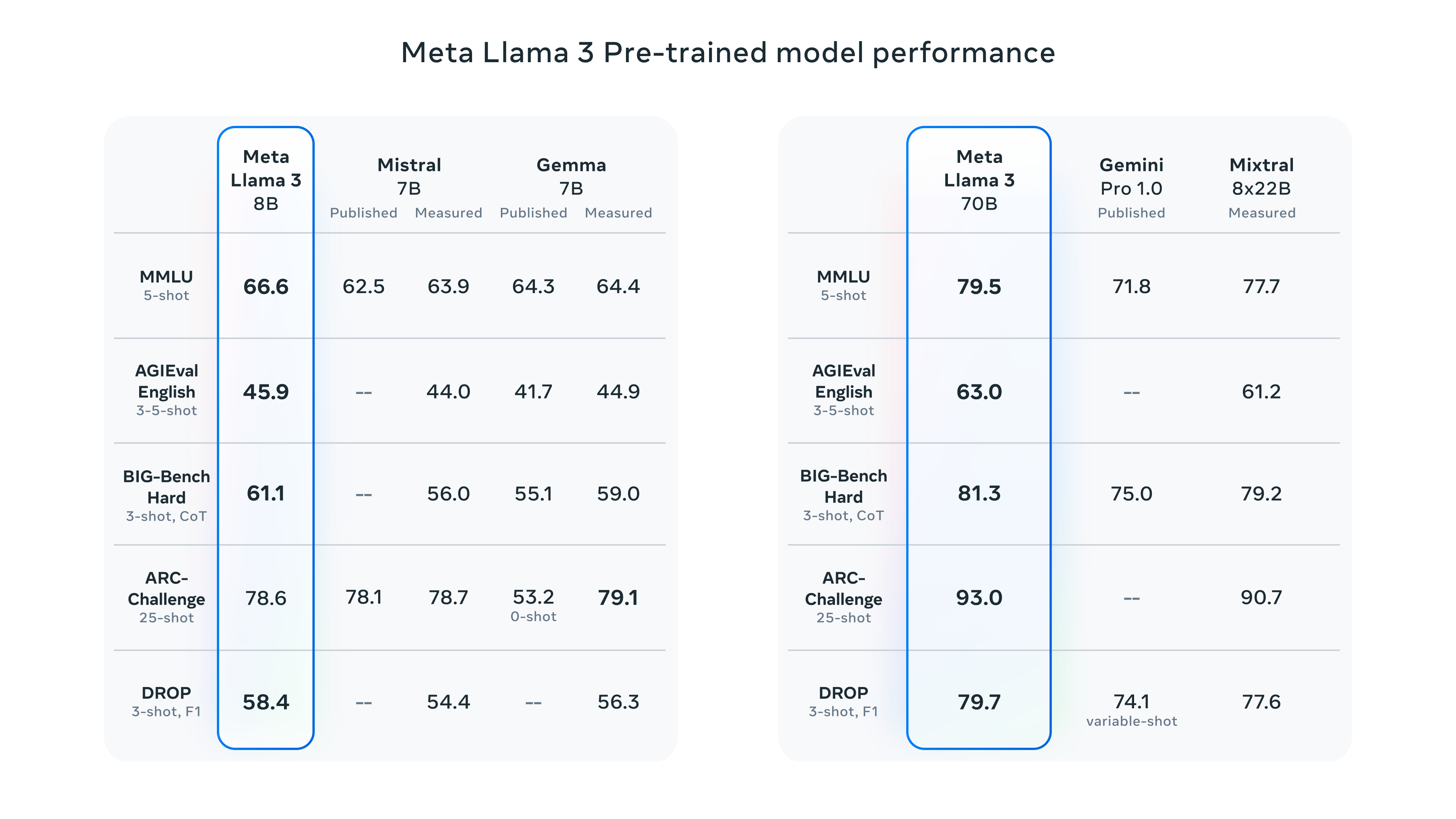

With Llama 3, Meta has significantly advanced the state of LLM technology. The 8B and 70B parameter models are a substantial leap over their Llama 2 predecessors and set a new state of the art for models of their size on benchmarks covering reasoning, code generation, and instruction following. Thanks to improvements in pretraining and post-training, these models produce more diverse responses, align better with the task at hand, and are easier to steer across a wide range of applications.

Meta’s commitment to openness remains a key driver in the Llama series’ development. By releasing Llama 3 as open-source, Meta aims to foster innovation across the AI community. This open approach allows developers and researchers to access, modify, and build upon the models, facilitating breakthroughs in various fields.

Optimized for Real-World Applications

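To ensure Llama 3 performs well in practical settings, Meta developed a new high-quality human evaluation set of 1,800 prompts covering 12 key use cases: asking for advice, brainstorming, classification, closed question answering, coding, creative writing, extraction, inhabiting a character or persona, open question answering, reasoning, rewriting, and summarization. To prevent accidental overfitting, even Meta’s own modeling teams did not have access to this set. The goal is to measure how the models perform in real-world scenarios, where accurate and nuanced responses matter, and not only on standard benchmarks.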

Preference rankings by human annotators on this set highlight the strong performance of Llama 3’s 70B instruction-tuned model compared with competing models of similar size, suggesting it can deliver high-quality results across diverse applications.
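This announcement does not spell out how the preference rankings are aggregated. As a minimal sketch, assuming each annotator judgment is recorded as a win, tie, or loss for one model against a competitor, pairwise preferences can be summarized as percentages like this. The function name and the labels below are hypothetical toy data, not Meta’s results.

```python
from collections import Counter


def preference_rates(judgments: list[str]) -> dict[str, float]:
    """Return win/tie/loss percentages for model A versus model B.

    Each judgment is one annotator's verdict on a pairwise comparison:
    "win" (model A preferred), "tie", or "loss" (model B preferred).
    """
    valid = {"win", "tie", "loss"}
    if not judgments:
        raise ValueError("no judgments supplied")
    unknown = set(judgments) - valid
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    counts = Counter(judgments)  # missing labels count as 0
    total = len(judgments)
    return {label: 100.0 * counts[label] / total for label in ("win", "tie", "loss")}


# Hypothetical toy labels for illustration only.
toy = ["win", "win", "tie", "loss", "win"]
print(preference_rates(toy))  # {'win': 60.0, 'tie': 20.0, 'loss': 20.0}
```

Reporting ties separately, rather than folding them into wins or losses, keeps the summary honest when annotators find two responses equally good.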

Innovative Training Approach

The development of Llama 3 involved careful choices in model architecture, data curation, and pretraining. Llama 3 uses a relatively standard decoder-only transformer architecture with two key improvements: a new tokenizer with a 128K-token vocabulary, which encodes language much more efficiently, and Grouped Query Attention (GQA), used in both the 8B and 70B models to improve inference efficiency. Because the tokenizer represents the same text with fewer tokens, the models process language more efficiently, which contributes to their improved performance.
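In GQA, several query heads share a single key/value head, which shrinks the key/value projections (and the KV cache at inference time) while keeping many query heads. The following is a minimal NumPy sketch of causal grouped-query attention, assuming toy dimensions that are not Llama 3’s actual configuration and omitting rotary position embeddings, the KV cache itself, and other details of the real model. The function name `grouped_query_attention` is hypothetical.

```python
import numpy as np


def grouped_query_attention(x, w_q, w_k, w_v, n_q_heads, n_kv_heads):
    """Causal self-attention where groups of query heads share one key/value head.

    x:        (seq_len, d_model) input activations
    w_q:      (d_model, n_q_heads * head_dim)
    w_k, w_v: (d_model, n_kv_heads * head_dim)
    """
    seq_len, _ = x.shape
    assert n_q_heads % n_kv_heads == 0, "query heads must divide evenly into KV groups"
    head_dim = w_q.shape[1] // n_q_heads
    group = n_q_heads // n_kv_heads

    # Project and split into heads: (heads, seq_len, head_dim).
    q = (x @ w_q).reshape(seq_len, n_q_heads, head_dim).transpose(1, 0, 2)
    k = (x @ w_k).reshape(seq_len, n_kv_heads, head_dim).transpose(1, 0, 2)
    v = (x @ w_v).reshape(seq_len, n_kv_heads, head_dim).transpose(1, 0, 2)

    # Each KV head is reused by `group` consecutive query heads.
    k = np.repeat(k, group, axis=0)  # (n_q_heads, seq_len, head_dim)
    v = np.repeat(v, group, axis=0)

    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)  # (n_q_heads, seq, seq)
    # Causal mask: position i may not attend to positions j > i.
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)

    # Numerically stable softmax over the key axis.
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)

    out = weights @ v  # (n_q_heads, seq_len, head_dim)
    return out.transpose(1, 0, 2).reshape(seq_len, n_q_heads * head_dim)


# Toy example: 8 query heads share 2 KV heads (4 query heads per group).
rng = np.random.default_rng(0)
d_model, n_q, n_kv, hd, seq = 64, 8, 2, 8, 5
x = rng.standard_normal((seq, d_model))
w_q = rng.standard_normal((d_model, n_q * hd))
w_k = rng.standard_normal((d_model, n_kv * hd))  # 4x narrower than w_q
w_v = rng.standard_normal((d_model, n_kv * hd))
out = grouped_query_attention(x, w_q, w_k, w_v, n_q, n_kv)
print(out.shape)  # (5, 64)
```

Setting `n_kv_heads == n_q_heads` recovers standard multi-head attention, while `n_kv_heads == 1` gives multi-query attention; GQA sits between the two.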

Meta pretrained Llama 3 on more than 15 trillion tokens, all collected from publicly available sources. This dataset is seven times larger than the one used for Llama 2, contains four times more code, and includes high-quality non-English data covering more than 30 languages. Combined with careful scaling work, this data allowed both models to keep improving log-linearly even after training on up to 15 trillion tokens, far beyond the amount of data conventionally considered compute-optimal for models of this size.
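“Log-linear” improvement means that, over the range studied, each multiplicative increase in training data buys a roughly constant gain. Schematically, with $D$ the number of training tokens, $S$ a performance score, and $\alpha$, $\beta$ fitted constants (illustrative symbols, not values reported by Meta):

```latex
S(D) \approx \alpha + \beta \log D
\qquad\Longrightarrow\qquad
S(10D) - S(D) \approx \beta \log 10
```

Under this model, going from 1.5 trillion to 15 trillion tokens would yield the same predicted gain as going from 150 billion to 1.5 trillion tokens.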

Fine-Tuning for Superior Results

Meta also refined its approach to instruction fine-tuning for Llama 3, combining supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO) to strengthen the model’s ability to follow instructions, reason, and generate code. The result is a model that handles complex prompts more reliably and gives more accurate, relevant answers.
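Direct preference optimization (DPO) is one widely used preference-optimization method: it trains the model to raise the likelihood of a human-preferred response relative to a rejected one, measured against a frozen reference model. The following is a minimal sketch of the standard DPO loss for a single preference pair; it is not Meta’s training code, and the value `beta=0.1` and the function names are illustrative assumptions.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response under the
    trainable policy or the frozen reference model.
    """
    # How much more (in log space) the policy likes each response than the reference does.
    chosen_margin = policy_chosen - ref_chosen
    rejected_margin = policy_rejected - ref_rejected
    # Loss is small when the policy favors the chosen response more than the reference does.
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


# When the policy still matches the reference, the loss equals log(2).
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
```

Because the reference model anchors the comparison, DPO can optimize preferences directly from labeled pairs without training a separate reward model, which is the main practical difference from PPO-based approaches.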

Prioritizing Responsibility in AI

Alongside performance, Meta has made responsible AI development a priority with Llama 3, introducing new trust and safety tools: Llama Guard 2, a safeguard model that classifies prompts and responses as safe or unsafe; Code Shield, which filters insecure code suggested by the model at inference time; and CyberSec Eval 2, a suite for evaluating an LLM’s cybersecurity risks. Together, these tools help developers guard against misuse, insecure code generation, and other security risks when building with Llama 3.

Meta’s Responsible Use Guide (RUG) provides a framework for deploying Llama 3 safely. Among other practices, it recommends checking and filtering all model inputs and outputs against content guidelines appropriate to the application.
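The input-and-output checking pattern can be sketched as a thin wrapper around the model call. In this minimal sketch, `generate` stands in for a Llama 3 call and `is_safe` for a safety classifier such as Llama Guard 2; both are hypothetical placeholders rather than real APIs, and the real Llama Guard 2 judges a response in the context of the conversation, which this simplified version omits.

```python
from typing import Callable

# Hypothetical interfaces: neither corresponds to a real library API.
Generate = Callable[[str], str]
SafetyCheck = Callable[[str], bool]

REFUSAL = "Sorry, I can't help with that request."


def moderated_reply(prompt: str, generate: Generate, is_safe: SafetyCheck) -> str:
    """Check the input before generation and the output before returning it."""
    if not is_safe(prompt):      # input filter
        return REFUSAL
    response = generate(prompt)
    if not is_safe(response):    # output filter
        return REFUSAL
    return response


# Toy stand-ins for illustration only.
def toy_generate(prompt: str) -> str:
    return f"Echo: {prompt}"


def toy_is_safe(text: str) -> bool:
    return "forbidden" not in text.lower()


print(moderated_reply("Summarize this article", toy_generate, toy_is_safe))
# Echo: Summarize this article
```

Checking both sides matters because a benign-looking prompt can still elicit an unsafe response, and an unsafe prompt should be stopped before any generation cost is incurred.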

Looking Ahead

The release of the 8B and 70B models marks the beginning of Meta’s vision for Llama 3. In the coming months, Meta plans to roll out additional models with multimodal capabilities, longer context windows, and multilingual support, paving the way for even more versatile AI solutions. Meta will also publish a detailed research paper documenting Llama 3’s development.

Llama 3 is now integrated into Meta AI, Meta’s AI assistant, which is available across platforms like Facebook, Instagram, WhatsApp, and Messenger. With Llama 3 technology, users can leverage Meta AI for a range of tasks, from learning and content creation to productivity and connecting with others.

Try Meta Llama 3 Today

Developers and researchers can download the Llama 3 models from Meta’s Llama website, along with a Getting Started Guide that explains how to use them. Everyone else can try Meta AI across Meta’s social apps, and a multimodal version of Meta AI will soon be available on Ray-Ban Meta smart glasses.
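When prompting the instruction-tuned models directly, the input must follow the Llama 3 chat format, which marks each turn with special header and end-of-turn tokens. The following is a minimal sketch of a single-turn prompt builder based on the format described in Meta’s Llama 3 Instruct model card; treat the Getting Started Guide as authoritative, and note that the function name `format_llama3_chat` is hypothetical. Libraries such as Hugging Face `transformers` can build this string for you with `tokenizer.apply_chat_template`.

```python
def format_llama3_chat(system: str, user: str) -> str:
    """Build a single-turn Llama 3 Instruct prompt that ends at the assistant header.

    The model is expected to write its reply after the final header and
    finish it with the <|eot_id|> end-of-turn token.
    """
    return (
        "<|begin_of_text|>"
        f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>"
        f"<|start_header_id|>user<|end_header_id|>\n\n{user}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


prompt = format_llama3_chat(
    "You are a helpful assistant.",
    "Summarize the Llama 3 announcement in one sentence.",
)
print(prompt)
```

Ending the prompt with an open assistant header signals that the next tokens belong to the model’s reply.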