MENLO PARK — Meta has introduced Llama 3.2, an enhanced version of its generative AI models, offering significant advancements in both vision and lightweight models designed for mobile and edge devices. This latest release marks a major step forward in AI accessibility and functionality, providing developers and businesses with state-of-the-art tools to build more efficient, adaptable, and secure applications. Llama 3.2 includes a range of models, such as the 11B and 90B vision large language models (LLMs), which excel in tasks requiring image reasoning and document-level understanding, and smaller text-only models like the 1B and 3B, specifically designed for on-device use. These smaller models are optimized for tasks like summarization, instruction following, and real-time text processing, allowing AI capabilities to function effectively on mobile and edge platforms without requiring extensive cloud resources.

One of the key highlights of Llama 3.2 is its vision capability, which enables multimodal applications that take both text and image inputs. The vision models are built for tasks such as analyzing charts and graphs, captioning images, and understanding detailed visual contexts. For example, a user could supply a graph of a business’s sales over the past year and ask which month performed best, and Llama 3.2 would reason over the image to answer. Similarly, it can assist with geographical or directional queries using maps, grounding its responses in what the map shows. These capabilities make Llama 3.2 a versatile tool for industries that rely heavily on visual data, such as retail, logistics, and healthcare.
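To make the sales-graph scenario concrete, the sketch below shows one way such a request could be packaged as a chat message that pairs an image slot with a text question. The article does not specify a request format, so this is an assumption: the layout follows the message convention commonly used with Hugging Face chat templates for vision models, and the helper name `build_vision_message` is hypothetical.

```python
from typing import Any


def build_vision_message(question: str, num_images: int = 1) -> list[dict[str, Any]]:
    """Build a single-turn user message with image placeholders and a question.

    Hypothetical helper: the image data itself is passed separately to whatever
    runtime consumes the message; each {"type": "image"} entry only marks where
    an image belongs in the prompt.
    """
    if num_images < 1:
        raise ValueError("a vision request needs at least one image")
    if not question.strip():
        raise ValueError("question must not be empty")

    # Image placeholders come first, followed by the text of the question.
    content: list[dict[str, Any]] = [{"type": "image"} for _ in range(num_images)]
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    messages = build_vision_message(
        "Which month in this sales graph had the highest revenue?"
    )
    print(messages)
```

A structure like this would typically be handed to the model runtime together with the image itself; how the two are combined depends on the library or service in use.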

Meta has also focused on making Llama 3.2 models more accessible to developers and enterprises by improving compatibility with mobile and edge devices. The 1B and 3B models are designed to run locally, which brings two major advantages: reduced latency and enhanced privacy. Because all processing happens on the device, users get faster response times and sensitive data never leaves the device, which addresses growing concerns about data privacy in AI applications. These models have been optimized to run efficiently on widely used hardware platforms, including Qualcomm and MediaTek chipsets, so they can be deployed across a broad range of devices.
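A rough back-of-the-envelope estimate of weight storage helps show why the 1B and 3B models are the ones aimed at on-device use. The sketch below is an illustration, not a figure from Meta. It assumes nominal parameter counts read off the model names, counts only the weights (no activations or cache), and uses generic precision labels as examples.

```python
# Nominal parameter counts taken from the model names (an assumption;
# actual checkpoints may differ somewhat from these round numbers).
MODELS = {"1B": 1e9, "3B": 3e9, "11B": 11e9, "90B": 90e9}

# Bits used to store each weight at a few example precisions.
BITS_PER_WEIGHT = {"16-bit": 16, "8-bit": 8, "4-bit": 4}


def weight_gb(params: float, bits: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return params * bits / 8 / 1e9


def footprint_table() -> dict[str, dict[str, float]]:
    """Map each model name to its estimated weight size at each precision."""
    return {
        name: {label: weight_gb(n, bits) for label, bits in BITS_PER_WEIGHT.items()}
        for name, n in MODELS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        sizes = ", ".join(f"{label}: {gb:.1f} GB" for label, gb in row.items())
        print(f"{name:>3}  {sizes}")
```

Under these assumptions the 1B model needs about 2 GB for its weights at 16 bits each, while the 90B model needs about 180 GB, which is far beyond what a phone can hold in memory.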

In addition to the technical innovations, Meta is taking steps to ensure that Llama 3.2 remains safe and reliable. The introduction of Llama Guard Vision, a safety feature designed to detect and mitigate potentially harmful inputs and outputs related to images and text, helps secure the use of the models in a wide range of environments. This is an expansion of the safety measures introduced in previous versions, now applied to the vision models to safeguard against malicious use cases, such as attempts to misuse image data for inappropriate purposes.

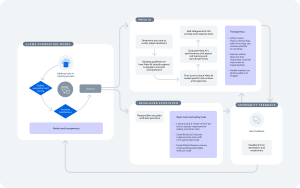

To make development with Llama 3.2 easier, Meta is also introducing Llama Stack distributions, which simplify the deployment of these models across different environments, whether on-premises, cloud, or edge. The Llama Stack API provides developers with a standardized interface to fine-tune Llama models for custom applications, including those that require retrieval-augmented generation (RAG) or other advanced AI functionalities. Meta’s partner ecosystem plays a pivotal role here: collaborations with leading companies such as AWS, Dell Technologies, and Google Cloud mean that Llama 3.2 is available in a range of enterprise solutions from day one. In total, Meta’s partnerships span more than 25 global tech firms, reflecting the broad applicability of, and growing demand for, Llama models.

The release of Llama 3.2 also underscores Meta’s commitment to openness and collaboration within the AI community. By making its models and tools available to the open-source community, Meta aims to drive innovation and encourage developers to build on its foundational work. This open-source approach is about more than transparency: it is meant to foster an inclusive environment where developers, researchers, and businesses around the world can collaborate to improve the technology and address its shortcomings.

Meta is also expanding the Llama ecosystem with Llama Guard updates and quantized versions of its models for faster deployment. Developers can now access these models via llama.com and Hugging Face, with broad ecosystem support from cloud providers, mobile platforms, and AI-focused companies. With this reach, Llama 3.2 is poised to bring AI capabilities closer to users and businesses while ensuring that the technology is both powerful and responsibly managed.

Meta’s Llama 3.2 is a significant leap forward in the world of AI, combining cutting-edge vision capabilities with lightweight, efficient models suitable for mobile and edge applications. With the release of this powerful AI suite, Meta reaffirms its belief in open-source collaboration, safety, and innovation, helping to shape the future of AI development for years to come.