MENLO PARK - Meta has unveiled two advances in its generative AI research: Emu Video and Emu Edit. The announcement, published by Meta, builds on the company’s earlier Emu model for image generation. At Meta Connect, the company showcased Emu-powered features, including AI-driven editing tools for Instagram and the Imagine feature, which lets users generate photorealistic images directly in chats. Meta is now extending that research to controlled image editing and text-to-video generation, both grounded in diffusion models.

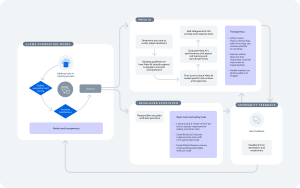

Emu Video takes a “factorized” approach to high-quality video generation, splitting the process into two steps: first generating an image from a text prompt, then generating a video conditioned on both the text and that image. The technique uses just two diffusion models to produce four-second videos at 512×512 resolution and 16 frames per second. Compared with prior work such as Make-A-Video, the streamlined architecture performs significantly better: 96% of respondents preferred Emu Video’s output for quality, and 85% preferred it for faithfulness to the text prompt. Emu Video can also animate user-provided images, outperforming earlier methods by a wide margin.
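The two-step factorization can be sketched in a few lines of Python. This is only an illustration of the control flow described above, not Meta’s implementation: the function names, the string-based `Image` placeholder, and the stub models are all hypothetical, and a real system would plug in two trained diffusion models. The only figures taken from the article are the four-second duration and 16 frames per second, which together imply 64 frames per clip.

```python
from typing import Callable, List

# Figures quoted in the article.
DURATION_S = 4   # clip length in seconds
FPS = 16         # frames per second

# Placeholder types (assumption): a real system would use image tensors.
Image = str
Video = List[Image]

TextToImage = Callable[[str], Image]
ImageToVideo = Callable[[str, Image, int], Video]


def frame_count(duration_s: int = DURATION_S, fps: int = FPS) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return duration_s * fps


def generate_video(prompt: str, t2i: TextToImage, i2v: ImageToVideo) -> Video:
    """Factorized generation: text -> image, then (text, image) -> video."""
    image = t2i(prompt)                       # step 1: image from text
    return i2v(prompt, image, frame_count())  # step 2: video from text + image


def animate_image(prompt: str, image: Image, i2v: ImageToVideo) -> Video:
    """Skip step 1 when the user supplies the starting image."""
    return i2v(prompt, image, frame_count())


# Hypothetical stand-ins for the two diffusion models.
def stub_text_to_image(prompt: str) -> Image:
    return f"image({prompt})"


def stub_image_to_video(prompt: str, image: Image, n_frames: int) -> Video:
    return [f"{image}#frame{i}" for i in range(n_frames)]


video = generate_video("a dog surfing", stub_text_to_image, stub_image_to_video)
print(len(video))  # 64 frames: 4 seconds at 16 frames per second
```

Because the second step takes the image as an explicit input, the same video model can serve both text-to-video generation and animation of a user-provided image, which is what `animate_image` illustrates.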

Emu Edit, meanwhile, lets users make highly detailed image edits through text instructions. It handles tasks ranging from local and global edits to background removal and geometry transformations, altering only the pixels relevant to the instruction and leaving the rest of the image untouched. For example, when asked to add the text “Aloha!” to a baseball cap, Emu Edit leaves the rest of the cap unchanged.
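One simple way to make the “untouched pixels” property concrete is to count how many pixels changed outside the region an edit was supposed to affect. The sketch below is an evaluation aid only, under stated assumptions: the article does not describe any mask input to Emu Edit, so the `region` grid, the function name, and the toy 3×3 grayscale images are all hypothetical.

```python
from typing import List

Grid = List[List[int]]  # toy grayscale image: rows of pixel values


def changed_outside_region(before: Grid, after: Grid, region: Grid) -> int:
    """Count pixels that differ between before and after where region is 0."""
    count = 0
    for row_b, row_a, row_m in zip(before, after, region):
        for b, a, m in zip(row_b, row_a, row_m):
            if m == 0 and a != b:
                count += 1
    return count


before = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
region = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]             # 1 marks the intended edit area
local_edit = [[10, 10, 10], [10, 99, 10], [10, 10, 10]]  # changes only the center pixel
leaky_edit = [[10, 10, 10], [10, 99, 10], [10, 10, 11]]  # also alters a corner pixel

print(changed_outside_region(before, local_edit, region))  # 0
print(changed_outside_region(before, leaky_edit, region))  # 1
```

A count of zero means the edit stayed inside the intended region; any positive count flags pixels that were altered unnecessarily.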

This precision comes from integrating computer vision tasks into the generative model, a key insight that allows for more controlled image manipulation. Meta trained Emu Edit on a dataset of 10 million samples, making it one of the largest of its kind. According to Meta, Emu Edit sets new state-of-the-art results across a range of editing tasks in both qualitative and quantitative evaluations.

Looking ahead, the potential applications of Emu Video and Emu Edit are vast. From animating static images for social media to creating custom GIFs and stickers, these tools could empower everyday users to express themselves creatively with minimal effort. While they are not replacements for professional artists, these technologies open new possibilities for creators, designers, and users alike to enhance their work and communication in imaginative ways.

This breakthrough marks an exciting step forward for generative AI, and Meta’s ongoing research promises to bring more innovations in the future that continue to blur the lines between creativity and AI-driven assistance.