It’s only been a week since Meta Llama 3 was released to the developer community, and the response has been overwhelmingly positive. These initial models are already sparking a new wave of AI innovation across the ecosystem, from applications and developer tools to evaluation improvements and inference optimization. Early results are impressive: community projects have doubled Llama 3’s context window and made advances in quantization, web navigation, low-precision fine-tuning, and local deployment.

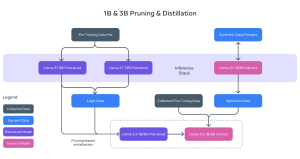

Since launch, the models have been downloaded more than 1.2 million times, and the community has already shared more than 600 derivative models on Hugging Face. The Llama 3 GitHub repository has passed 17,000 stars. On evaluations, Llama 3 70B Instruct is tied for first place in English-only evaluations on the LMSYS Chatbot Arena Leaderboard. Overall, it is the top-ranked openly available model, trailing only closed proprietary models.

Our silicon, hardware, and cloud partners have also quickly adopted Llama 3 and made it available to their users, and the community is already fine-tuning the model for specific applications. For example, just 24 hours after release, researchers at Yale School of Medicine, in collaboration with EPFL’s School of Computer and Communication Sciences, fine-tuned Meta Llama 3 to create Llama-3[8B]-MeditronV1.0, a model designed specifically for medical use. It now outperforms other state-of-the-art open models in its class on benchmarks such as MedQA and MedMCQA. You can read more about how the teams from Yale and EPFL built Meditron on Llama 2 [here].

As we mentioned during the launch, Llama 3 is just getting started. In the coming months, we’ll introduce models with new features, including multimodality, the ability to converse in multiple languages, extended context windows, and enhanced overall performance. We’re excited to see how the community continues to drive innovation, and we look forward to sharing more updates soon.