MENLO PARK — Meta has unveiled the Video Joint Embedding Predictive Architecture (V-JEPA), a pioneering model designed to advance progress toward advanced machine intelligence (AMI). V-JEPA is a non-generative model that learns by predicting masked or missing parts of a video in an abstract representation space, making it a crucial step toward AI that understands and interacts with the physical world more like humans do.

The model builds on Meta’s earlier work on Joint Embedding Predictive Architectures (JEPA), extending the approach to video. V-JEPA excels at detecting fine-grained object interactions, improving the efficiency and accuracy of AI models tasked with understanding the real world. Because it predicts abstract representations rather than reconstructing pixel-level details, V-JEPA trains faster and more efficiently than traditional approaches, with efficiency gains of up to 6x.
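To make the idea of predicting in an abstract representation space more concrete, here is a minimal toy sketch in Python. It is not Meta’s implementation: the linear “encoders”, the mean-pooled context, the hypothetical `pos_emb` position vectors, the L1 loss, and the slowly updated (EMA) target encoder are all simplifying assumptions chosen for illustration. What it does show is that the loss compares predicted and target embeddings of hidden video patches, so no pixels are ever reconstructed.

```python
import random

# Toy stand-ins: the "encoders" and the predictor are plain linear maps (lists of rows).
# Real V-JEPA uses vision transformers; everything here is illustrative only.

def linear(weights, vec):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def latent_prediction_loss(tokens, mask, context_w, target_w, predictor_w, pos_emb):
    """Average L1 error between predicted and target embeddings of masked tokens.

    tokens: list of patch feature vectors; mask: set of hidden token indices;
    pos_emb: hypothetical per-position vectors telling the predictor where to predict.
    """
    # 1. The context encoder sees only the visible (unmasked) tokens.
    visible = [linear(context_w, t) for i, t in enumerate(tokens) if i not in mask]
    dim = len(visible[0])
    # 2. Toy simplification: mean-pool the visible embeddings into one context vector.
    context = [sum(v[d] for v in visible) / len(visible) for d in range(dim)]
    total = 0.0
    for i in sorted(mask):
        # 3. Targets come from a separate encoder applied to the hidden tokens.
        target = linear(target_w, tokens[i])
        # 4. The predictor works in embedding space; no pixels are reconstructed.
        query = [c + p for c, p in zip(context, pos_emb[i])]
        pred = linear(predictor_w, query)
        total += sum(abs(p - t) for p, t in zip(pred, target)) / dim
    return total / len(mask)

def ema_update(target_w, context_w, momentum=0.99):
    """Move the target encoder slowly toward the context encoder (no gradients)."""
    return [[momentum * t + (1 - momentum) * c for t, c in zip(t_row, c_row)]
            for t_row, c_row in zip(target_w, context_w)]

rng = random.Random(0)
in_dim, emb_dim, n_tokens = 6, 4, 8
tokens = [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(n_tokens)]
mask = {2, 3, 4}  # hide a contiguous block of patches
context_w = random_matrix(emb_dim, in_dim, rng)
target_w = [row[:] for row in context_w]  # target starts as a copy of the context encoder
predictor_w = random_matrix(emb_dim, emb_dim, rng)
pos_emb = [[rng.gauss(0, 0.1) for _ in range(emb_dim)] for _ in range(n_tokens)]

loss = latent_prediction_loss(tokens, mask, context_w, target_w, predictor_w, pos_emb)
print(f"latent prediction loss: {loss:.4f}")
target_w = ema_update(target_w, context_w)
```

In a real training loop, only the context encoder and predictor would receive gradient updates, while `ema_update` keeps the target encoder a lagging copy of the context encoder. That arrangement is a common choice in joint-embedding methods and is assumed here rather than taken from this announcement.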

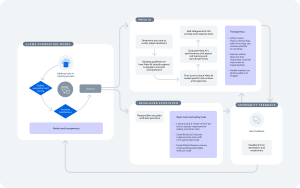

V-JEPA is trained with self-supervised learning, so no labeled data is needed during pre-training. Labels are introduced only afterward, to adapt the model to specific tasks, which lets it take on new tasks efficiently without full retraining. The model has shown significant improvements in action recognition, object interaction detection, and other video tasks, performing especially well in low-shot settings where few labeled examples are available.
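The pre-train-once, adapt-with-labels workflow can be illustrated with a toy Python example: a frozen feature extractor whose output is reused as-is, plus a small classifier trained on a handful of labeled clips. Everything here is a hypothetical stand-in. `frozen_encoder` only mimics a pretrained backbone with two fixed summary statistics, and the logistic-regression head is an illustrative choice, not necessarily the task-specific layer Meta uses.

```python
import math
import random

def frozen_encoder(clip):
    """Hypothetical stand-in for a pretrained backbone; it has no trainable weights.

    Returns two fixed summary features of the input clip.
    """
    mean = sum(clip) / len(clip)
    spread = max(clip) - min(clip)
    return [mean, spread]

def train_probe(features, labels, lr=0.1, epochs=500):
    """Fit a logistic-regression head on frozen features; only w and b are updated."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            grad = p - y  # derivative of the log loss with respect to z
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b

def predict(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if z > 0 else 0

rng = random.Random(1)
# Toy labeled "clips": class 1 clips have larger values than class 0 clips.
clips = (
    [[rng.uniform(0, 1) for _ in range(5)] for _ in range(4)]
    + [[rng.uniform(2, 3) for _ in range(5)] for _ in range(4)]
)
labels = [0] * 4 + [1] * 4
features = [frozen_encoder(c) for c in clips]  # computed once; the backbone is not retrained
w, b = train_probe(features, labels)
accuracy = sum(predict(w, b, f) == y for f, y in zip(features, labels)) / len(labels)
print(f"training accuracy of the probe: {accuracy:.2f}")
```

Because only the head's weights `w` and `b` change, adapting to a new task in this sketch means training a few parameters on a few labels rather than retraining the backbone.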

Advancing AI Perception and Planning

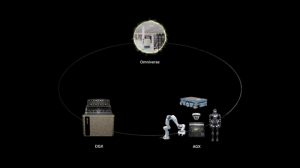

Rather than merely observing pixels, V-JEPA develops a more grounded understanding of the physical environment. Its ability to predict spatio-temporal events in video allows it to conceptualize scenes and anticipate actions. This is a step toward building “world models”, an AI’s internal representation of its surroundings, and brings Meta closer to its long-term goal of advanced machine intelligence.

While current applications of V-JEPA focus on short-term perception and fine-grained interactions, future work will aim to extend the model to long-term planning and sequential decision-making, key steps toward truly autonomous AI systems.

A Commitment to Open Science

Meta is releasing V-JEPA under a Creative Commons Attribution-NonCommercial (CC BY-NC) license to encourage responsible research and collaboration within the AI community. Yann LeCun, Meta’s Chief AI Scientist, underscored the significance of the release: “V-JEPA is a crucial step toward more generalized reasoning and planning in AI. We want to build systems that learn like humans, by forming internal models of the world, and we are excited to share this work openly to accelerate progress in the field.”