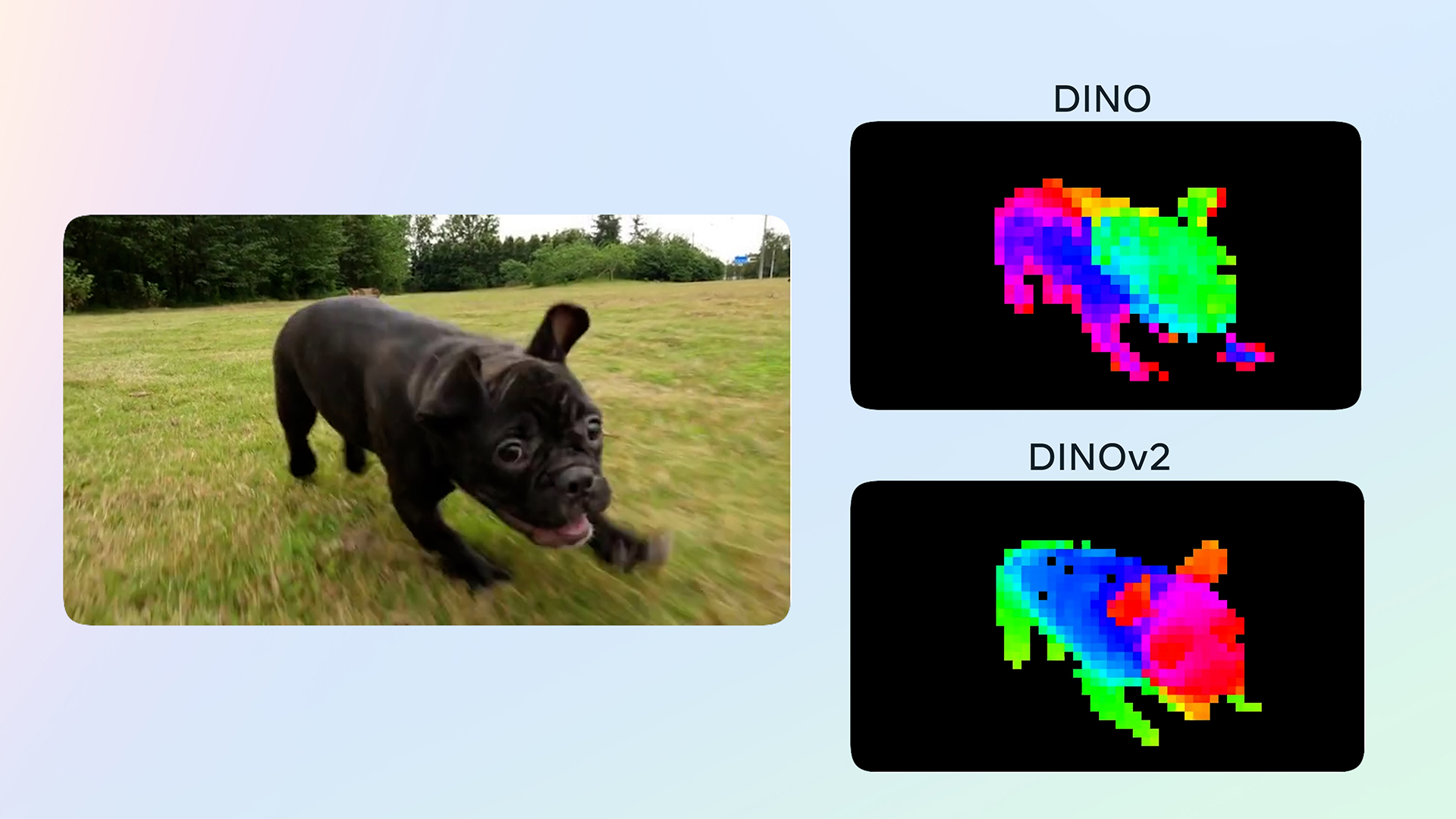

MENLO PARK — Meta AI has unveiled DINOv2, a next-generation self-supervised model designed to advance computer vision capabilities across various tasks without requiring labeled data. Unlike previous models that rely on text-image annotations, DINOv2 excels by learning directly from raw images, enhancing flexibility and effectiveness in tasks such as classification, depth estimation, and segmentation. This release of DINOv2 marks a breakthrough in self-supervised learning, enabling a high level of generalization and performance on tasks traditionally requiring fine-tuning.

In partnership with the World Resources Institute, DINOv2 has already demonstrated real-world application, such as mapping forests accurately from large-scale image data. DINOv2’s backbone, based on a straightforward and scalable architecture, allows it to perform reliably across different domains and datasets without losing accuracy. Its ability to accurately capture fine details and localize image parts offers significant improvements over models trained on text-image data, which may overlook contextual elements.

For a broader reach, Meta AI is releasing DINOv2’s models and pre-training code, allowing the community to explore, adapt, and innovate further. Capable of producing high-quality visual embeddings, DINOv2’s pretrained models can be applied directly to various computer vision challenges with minimal setup, establishing a new standard for computer vision research and applications without needing extensive labeled datasets.

With this launch, Meta AI emphasizes the expanding role of self-supervised learning as a cornerstone in visual AI development, paving the way for new use cases and integrated AI systems that can leverage DINOv2’s robust image analysis as a key component.