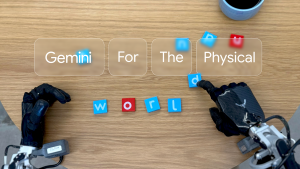

LONDON — Google DeepMind has announced additions to its Gemini model family. It unveiled Gemini 1.5 Flash, a lighter-weight model built for speed and efficiency, alongside Project Astra, its vision for the next generation of AI assistants.

Google presented Project Astra through a two-part demo showing how AI assistants might work in the near future. Each part was captured in a single take. The prototype agents process information quickly by encoding video frames in real time, combining video and speech input into a single timeline of events, and caching that information for efficient recall.

Using DeepMind’s speech models, the agents interpret spoken input quickly and respond with a wider range of intonations, which makes conversations with them sound more natural. Improved context awareness is meant to make the agents more fluid and responsive in use.

These advances bring expert AI assistants built into everyday devices, such as phones or smart glasses, closer to reality. Google expects to bring some of these capabilities to its products, including the Gemini app and web experience, by the end of the year.

Together, Gemini 1.5 Flash and Project Astra extend what the Gemini model family can do and point toward new applications for AI assistants.
