MOUNTAIN VIEW — The creators of the Gemma family of open models marked a significant milestone with the launch of Gemma 3, a new suite of lightweight, cutting-edge AI models. The release coincides with Gemma's first birthday, a year in which the family passed 100 million downloads and inspired more than 60,000 community-created variants, and it reinforces the team's commitment to democratizing AI technology.

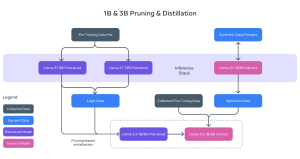

Built on the same research and technology that powers Gemini 2.0, Gemma 3 is designed for speed, portability, and responsible development. It comes in sizes from 1B to 27B parameters, so developers can match the model to their hardware and performance needs, whether they are targeting smartphones, laptops, or high-powered workstations. Gemma 3 also offers out-of-the-box support for over 35 languages and pretrained support for more than 140, giving applications a genuinely global reach.
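To make the size-versus-hardware trade-off concrete, here is a rough back-of-the-envelope sketch. It assumes the four released sizes (1B, 4B, 12B, and 27B) have exactly their nominal parameter counts and counts only the memory needed to store the weights; activations, the KV cache, and runtime overhead are ignored, so real usage will be higher. The helper names are hypothetical and not part of any Gemma tooling.

```python
from typing import Dict, Optional

# Nominal parameter counts, in billions, for the four Gemma 3 sizes.
GEMMA3_SIZES_B: Dict[str, float] = {"1B": 1, "4B": 4, "12B": 12, "27B": 27}


def weight_memory_gb(params_billion: float, bits_per_param: int = 16) -> float:
    """Approximate size of the weights alone, in decimal GB.

    Ignores activations, the KV cache, and framework overhead, so actual
    memory use on a device will be higher than this estimate.
    """
    # billions of params * bits per param / 8 bits per byte = billions of bytes (GB)
    return params_billion * bits_per_param / 8


def largest_fitting_size(budget_gb: float, bits_per_param: int = 16) -> Optional[str]:
    """Return the largest Gemma 3 size whose weights fit in budget_gb, else None."""
    best = None
    # Dicts keep insertion order, so sizes are checked from smallest to largest.
    for name, params in GEMMA3_SIZES_B.items():
        if weight_memory_gb(params, bits_per_param) <= budget_gb:
            best = name
    return best
```

For example, `largest_fitting_size(16.0)` picks the 4B model at 16 bits per weight, while `largest_fitting_size(16.0, bits_per_param=4)` lets the 27B model fit in the same hypothetical budget, which is why quantization matters for smaller devices.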

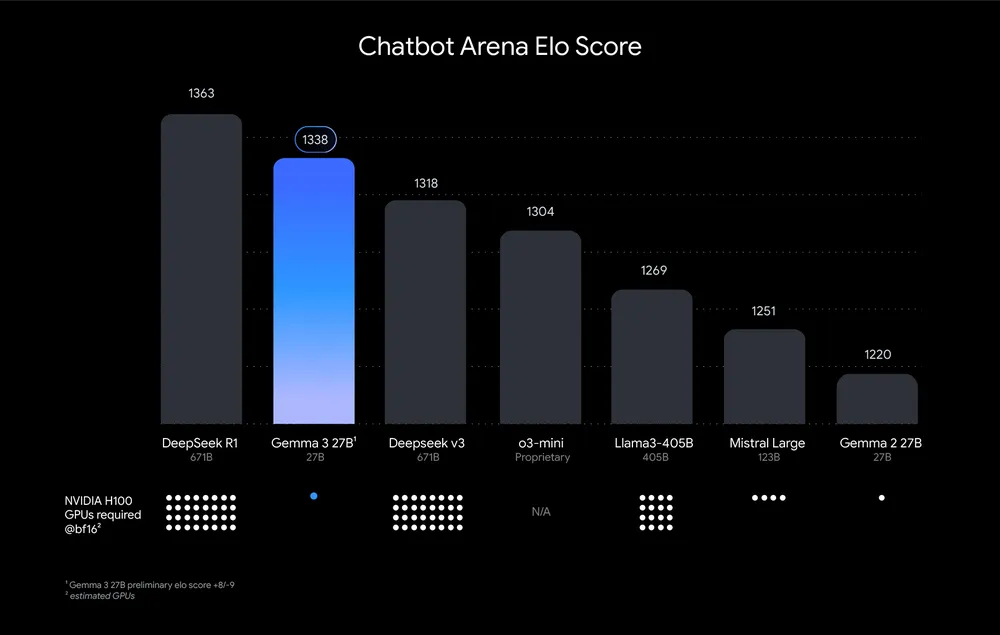

Gemma 3 delivers state-of-the-art performance for its size, outperforming competing models in preliminary human preference evaluations. Its advanced text and visual reasoning, expanded 128k-token context window, and support for function calling let developers build AI applications that handle complex tasks and large amounts of information. In addition, official quantized versions reduce memory and compute requirements while maintaining high accuracy, making Gemma 3 one of the most efficient models available for a single GPU or TPU host.
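The single-accelerator claim rests on simple arithmetic: storing fewer bits per weight shrinks the model proportionally. The sketch below applies this to the 27B model. The bf16/int8/int4 formats and the 24 GB budget are illustrative assumptions, not a description of the official quantized checkpoints, and the estimate covers weights only.

```python
from typing import List, Tuple

# Nominal parameter count of the largest Gemma 3 model.
PARAMS_27B = 27e9

# Bits per weight for a few common storage formats (illustrative choices).
PRECISIONS = {"bf16": 16, "int8": 8, "int4": 4}


def weights_gb(n_params: float, bits: int) -> float:
    """Weights-only footprint in decimal GB (excludes KV cache and activations)."""
    return n_params * bits / 8 / 1e9


def quantization_summary(
    n_params: float, budget_gb: float
) -> List[Tuple[str, float, float, bool]]:
    """For each precision: (name, size in GB, compression vs bf16, fits in budget)."""
    baseline = weights_gb(n_params, PRECISIONS["bf16"])
    rows = []
    for name, bits in PRECISIONS.items():
        size = weights_gb(n_params, bits)
        rows.append((name, size, baseline / size, size <= budget_gb))
    return rows


# A hypothetical single accelerator with 24 GB of memory.
for name, size, factor, fits in quantization_summary(PARAMS_27B, budget_gb=24.0):
    print(f"{name:>5}: {size:5.1f} GB, compression vs bf16: {factor:.0f}x, fits: {fits}")
```

Under these assumptions, only the 4-bit variant of the 27B model fits in 24 GB; real deployments also need headroom for the KV cache, which grows with context length.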

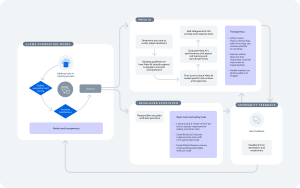

Safety remains a top priority in the development of Gemma 3. The team applied rigorous safety protocols, extensive data governance, and targeted evaluations to minimize the risk of misuse, paying particular attention to the model's stronger STEM capabilities. Alongside it, the team released ShieldGemma 2, a 4B-parameter image safety checker built on the Gemma 3 foundation. It outputs safety labels for three categories (dangerous content, sexually explicit material, and violence), and developers can customize it to fit their own safety policies.
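One way to picture "customizable safeguards" is a per-category threshold policy applied to a classifier's output. The sketch below assumes, hypothetically, that an image safety checker returns one probability in [0, 1] for each of the three categories above; the category keys, default thresholds, and function name are illustrative and do not reflect ShieldGemma 2's actual interface.

```python
from typing import Dict, List, Optional

# The three harm categories ShieldGemma 2 is described as labeling (keys are hypothetical).
CATEGORIES = ("dangerous_content", "sexually_explicit", "violence")

# Hypothetical default cut-offs; a real deployment would tune these to its policy.
DEFAULT_THRESHOLDS: Dict[str, float] = {c: 0.5 for c in CATEGORIES}


def flagged_categories(
    scores: Dict[str, float],
    overrides: Optional[Dict[str, float]] = None,
) -> List[str]:
    """Return the categories whose score meets or exceeds its threshold.

    `scores` is assumed to hold one probability per category. `overrides`
    replaces individual default thresholds, which is where customization happens.
    """
    thresholds = {**DEFAULT_THRESHOLDS, **(overrides or {})}
    missing = [c for c in CATEGORIES if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return [c for c in CATEGORIES if scores[c] >= thresholds[c]]


# Hypothetical classifier output for one image.
example = {"dangerous_content": 0.10, "sexually_explicit": 0.70, "violence": 0.40}
print(flagged_categories(example))                      # default policy
print(flagged_categories(example, {"violence": 0.30}))  # stricter on violence
```

Keeping the thresholds outside the classifier means the same model can serve an application that tolerates some violent imagery (for example, a news archive) as well as one that must be stricter.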

Gemma 3 and ShieldGemma 2 integrate with popular development tools and platforms, including Hugging Face Transformers, PyTorch, and Google AI Studio, so developers can experiment with, customize, and deploy AI solutions quickly. The wider Gemmaverse, the ecosystem of community-built Gemma models and tools, continues to grow, with projects such as AI Singapore's SEA-LION v3, INSAIT's BgGPT, and Nexa AI's OmniAudio.

To further foster academic research, the team has also launched the Gemma 3 Academic Program, which offers researchers Google Cloud credits worth $10,000 per award. The initiative underscores its commitment to supporting innovative research and expanding the reach of Gemma-powered AI applications across industries.

As Gemma 3 makes advanced AI development more accessible, it reinforces the vision of a future where high-quality AI technology is at everyone's fingertips, empowering creators to build transformative applications wherever they are needed.

In the context of AI, what’s the radical shift here?

The radical shift is the move toward lightweight, portable AI models that run efficiently on everyday devices instead of relying solely on massive, resource-intensive systems. This approach democratizes access to advanced AI by delivering strong performance across a broad range of hardware, from smartphones and laptops to a single GPU or TPU host, while still offering features such as broad language support, function calling, and robust safety measures.