SAN FRANCISCO — AI safety and research company Anthropic has launched Claude 3.7 Sonnet, a new AI model designed to tackle more complex tasks with a feature called “extended thinking mode.” By letting users toggle deeper or faster reasoning, Anthropic says this update fundamentally changes how AI can be applied to both intricate challenges—like solving advanced mathematics or debugging code—and simpler inquiries like checking the day’s date.

Extended Thinking, Now with a “Thinking Budget”

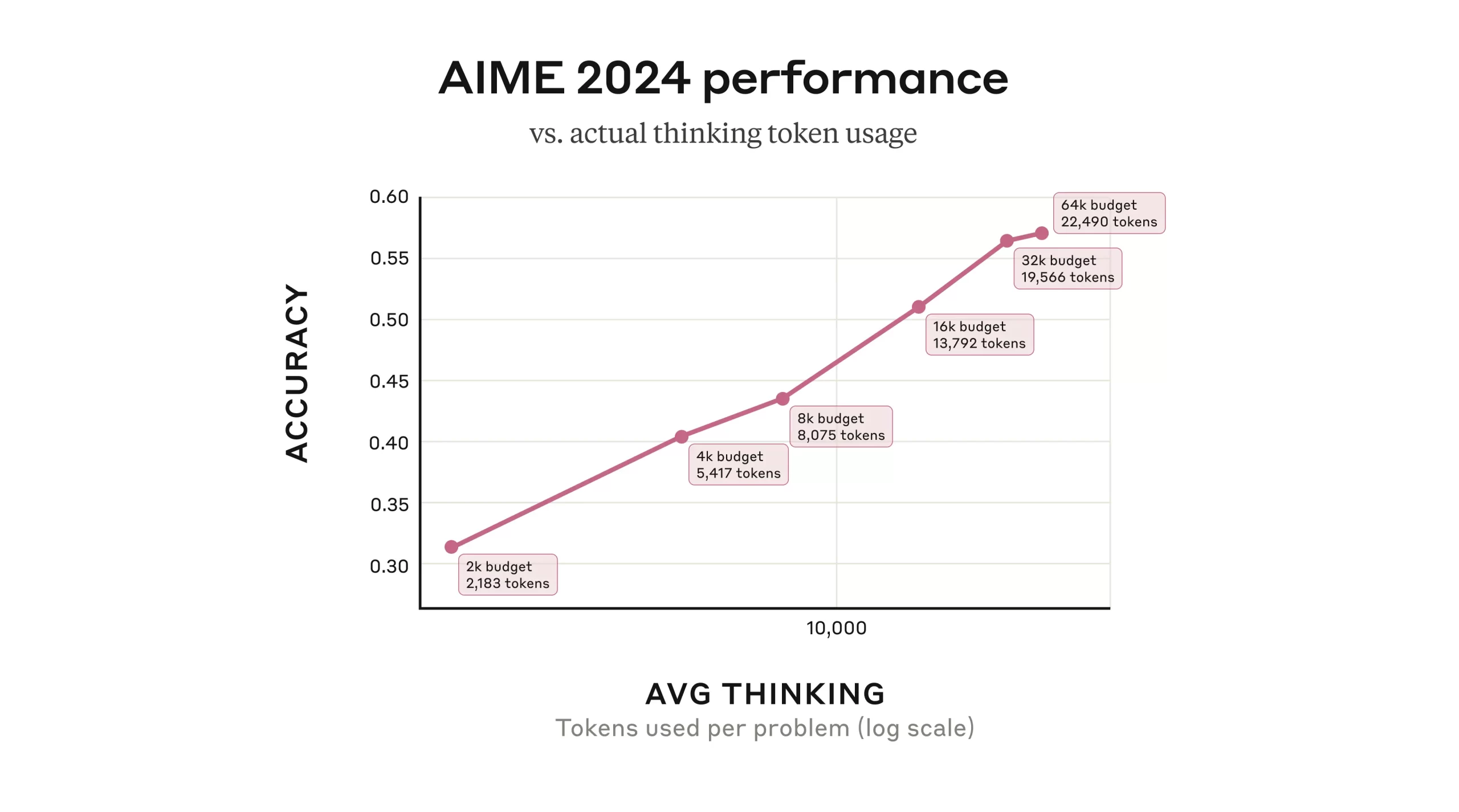

The most prominent change in Claude 3.7 Sonnet is the ability to devote additional mental “effort” to difficult questions. Users can turn on extended thinking mode to let Claude spend more time working toward an answer, and developers can set a “thinking budget,” measured in tokens, that caps how much reasoning it does before responding. Rather than switching to a separate model or engine, extended thinking has the same model explore reasoning paths more deeply, which can improve its accuracy on complex questions.
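For developers, the budget is set per request. The sketch below only builds a request payload and makes no network call. The `thinking` and `budget_tokens` field names and the model identifier reflect our understanding of Anthropic's Messages API; the `build_request` helper and the 1,024-token floor it enforces are illustrative assumptions, so check the current API reference before relying on them.

```python
from typing import Optional

MIN_BUDGET_TOKENS = 1024  # assumed minimum thinking budget; verify against the API docs


def build_request(prompt: str, budget_tokens: Optional[int] = None,
                  max_tokens: int = 20000) -> dict:
    """Build a Messages API style payload (hypothetical helper, no network call)."""
    request = {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if budget_tokens is not None:
        # The budget has to leave room for the final answer within max_tokens.
        if not MIN_BUDGET_TOKENS <= budget_tokens < max_tokens:
            raise ValueError("budget_tokens must be >= 1024 and below max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": budget_tokens}
    return request


# A quick lookup skips extended thinking; a harder problem gets a budget.
quick = build_request("What is today's date?")
deep = build_request("Prove that sqrt(2) is irrational.", budget_tokens=16000)
print("thinking" in quick, deep["thinking"]["budget_tokens"])  # prints: False 16000
```

Leaving the budget unset keeps the model in its faster default mode, which matches the article's distinction between simple inquiries and harder problems.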

A Visible Thought Process

In a move intended to increase user trust, Anthropic has made Claude’s raw thought process visible. This lets users see the AI’s intermediate reasoning as it arrives at a final answer, offering clearer insight into how the model breaks down problems. According to Anthropic’s alignment researchers, the ability to watch Claude’s “train of thought” can boost confidence and help detect inconsistencies or potential misalignments—though the company emphasizes it does not guarantee “faithfulness,” as the model’s hidden processes may still differ from its visible thoughts.

Action Scaling and “Agent” Abilities

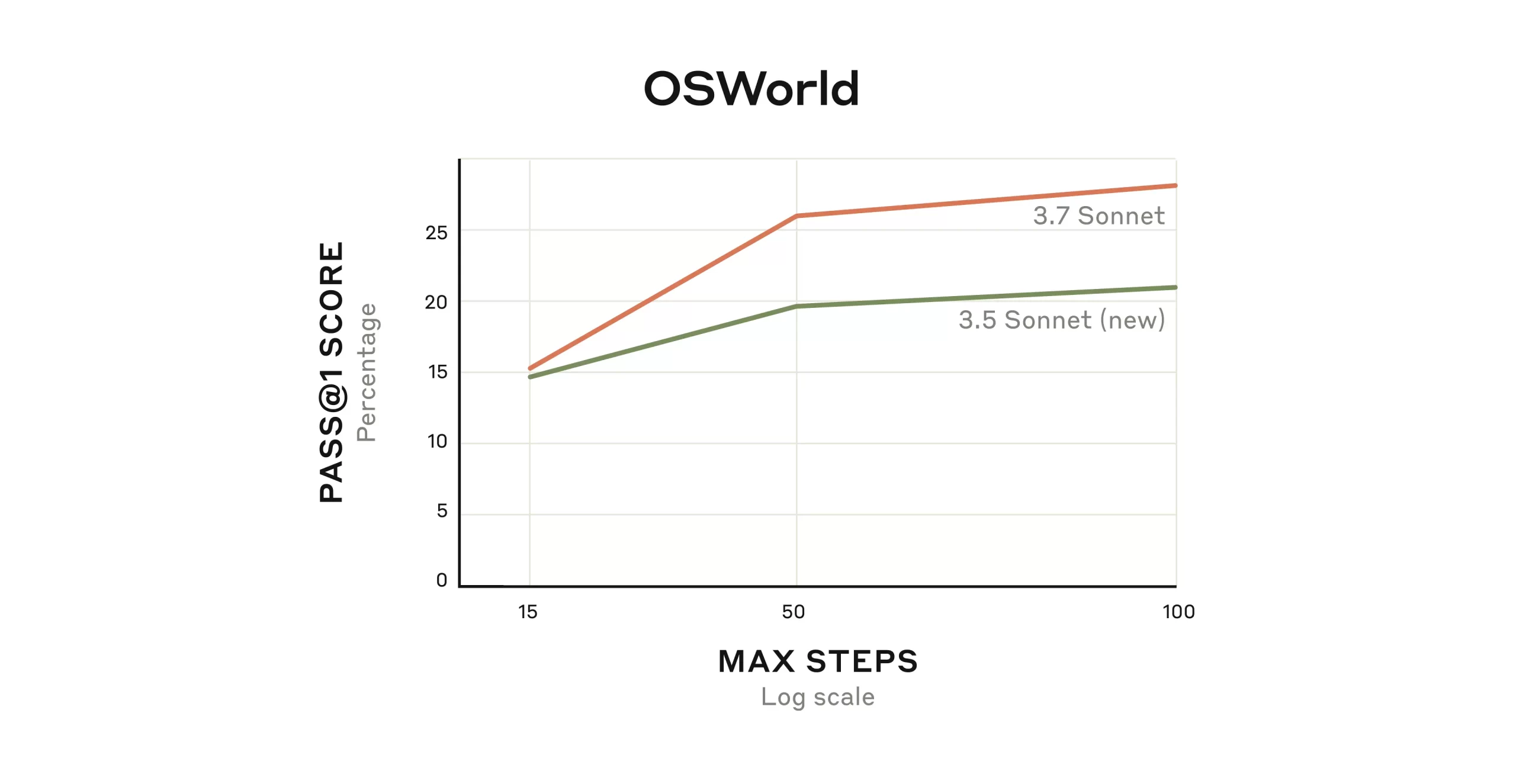

Claude 3.7 Sonnet also introduces upgraded “agentic” features, allowing the model to perform iterative tasks like navigating a computer environment or engaging with complex user interfaces. On OSWorld, an independent benchmark that evaluates multimodal agents on computer tasks, Claude 3.7 Sonnet improved on previous versions, with better results as it was allowed more steps and more computational power when needed.

One of the most eye-catching demonstrations is Claude’s ability to play classic Pokémon Red. Whereas prior versions of Claude got stuck early on, Claude 3.7 Sonnet leveraged its extended thinking and agent training to move through the game’s challenges, defeating three Gym Leaders. While playing Pokémon may be niche, Anthropic says the same long-context, open-ended approach can bring real-world advantages to tasks like data analysis, user support, and continuous process automation.

Parallel and Serial Compute

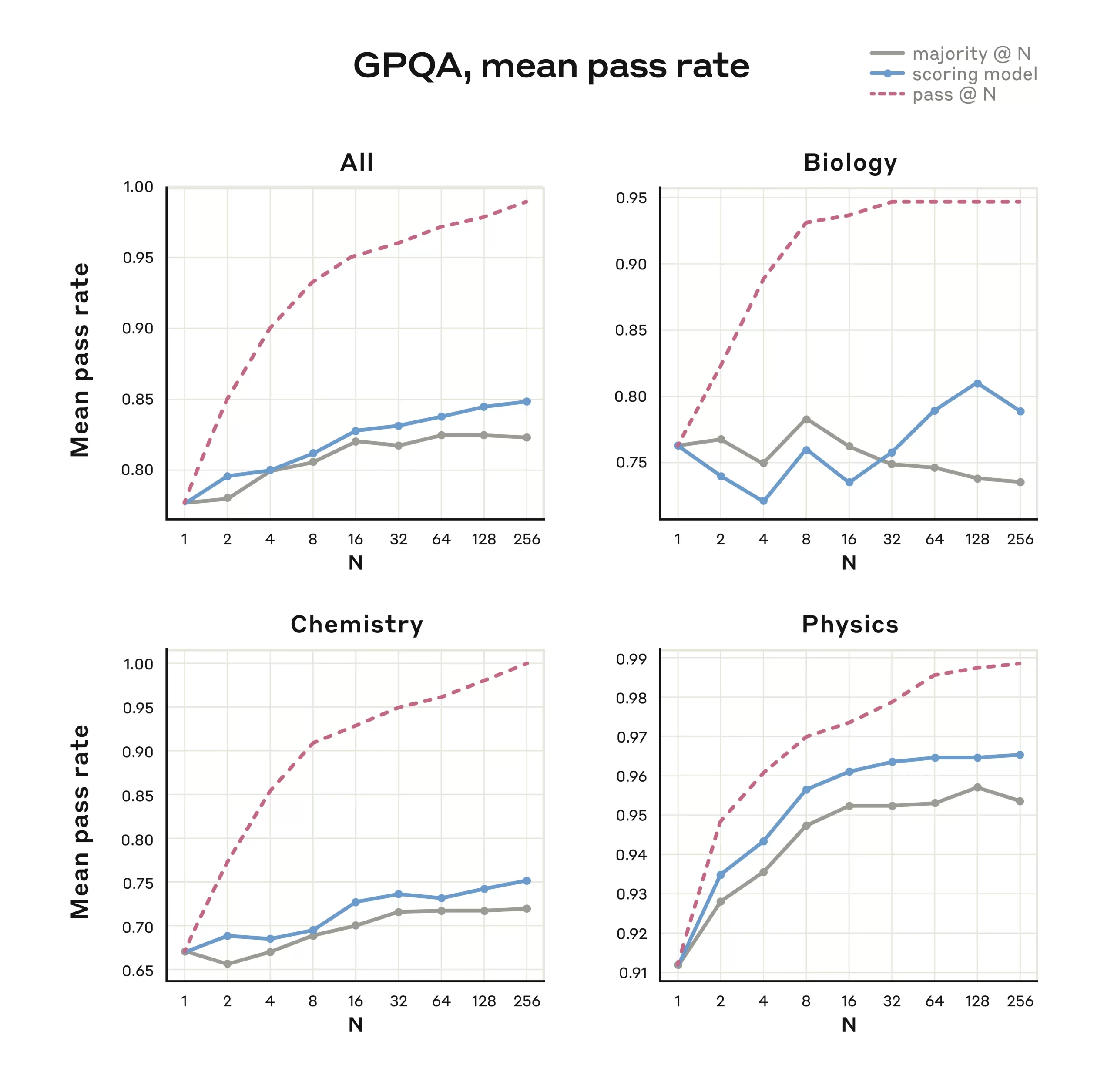

Anthropic’s research also highlights “serial test-time compute,” where Claude extends its reasoning steps before producing an output, and “parallel test-time compute,” where multiple thought processes run at once. In internal testing on GPQA—a set of tough science questions—running many parallel samples and using a learned scoring system significantly boosted accuracy. While parallel compute isn’t yet publicly available in the new release, Anthropic notes it could become a powerful method for future enhancements.
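The parallel approach can be illustrated with a minimal best-of-N sketch: draw several independent candidate answers, score each one, and keep the highest-scoring candidate. This is not Anthropic's implementation. `best_of_n`, `toy_sample`, and `toy_score` are hypothetical names, the sampler and scorer are toy stand-ins for a model call and a learned scoring model, and the candidates are drawn sequentially here rather than concurrently.

```python
from typing import Callable


def best_of_n(sample: Callable[[int], str],
              score: Callable[[str], float],
              n: int) -> str:
    """Draw n candidate answers and return the one with the highest score."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # In a real system each sample would be an independent model run,
    # typically issued in parallel; here they are generated one by one.
    candidates = [sample(i) for i in range(n)]
    return max(candidates, key=score)


def toy_sample(i: int) -> str:
    """Stand-in for one model run: cycles through multiple-choice answers."""
    return ["A", "B", "C", "D"][i % 4]


def toy_score(answer: str) -> float:
    """Stand-in for a learned scorer: made-up scores for each answer."""
    return {"A": 0.2, "B": 0.9, "C": 0.4, "D": 0.1}[answer]


print(best_of_n(toy_sample, toy_score, n=8))  # prints: B
```

The quality of the result depends on the scorer: more samples only help if the scoring step can reliably tell a good answer from a bad one.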

Safety and Alignment Measures

Despite its improved performance, Claude 3.7 Sonnet remains under Anthropic’s AI Safety Level 2 (ASL-2) standard, part of the company’s Responsible Scaling Policy. Red-teaming showed that although the model is more sophisticated, it still hit dead ends on illicit tasks (e.g., creating dangerous weapons) and could not complete them. Anthropic also introduced new security measures for Claude’s ability to view and interact with a user’s computer, making it more resistant to “prompt injection” attacks.

Where extended thinking is visible, certain content deemed potentially harmful is encrypted rather than displayed—ensuring Claude’s open-ended reasoning can still happen without exposing sensitive or dangerous ideas to the user. Anthropic says it may re-evaluate whether to keep the thought process fully visible in subsequent releases, especially as AI capabilities progress.

Availability and Looking Ahead

Claude 3.7 Sonnet is available now on Claude.ai for Pro, Team, and Enterprise users and through Anthropic’s API for qualified developers. The company has published a full System Card detailing safety features, alignment efforts, and further experiments demonstrating Claude’s agentic and extended reasoning abilities.

For Anthropic, the launch of Claude 3.7 Sonnet is a showcase of how large language models might one day approach near-human flexibility—capable of quick answers when needed, yet able to dive deeply into more formidable tasks, all while giving a window into the reasoning behind the machine.

Why is this announcement important for the AI industry?

Anthropic’s Claude 3.7 Sonnet announcement is significant because it highlights greater transparency and controllability in AI systems—two critical goals for the industry as it tackles challenges like hallucination, alignment, and user trust. By enabling deeper or quicker “modes of thought” and letting users see how the AI reaches its conclusions, developers gain more direct oversight of the model’s reasoning. This represents a move toward safer, more accountable AI, potentially setting a new standard for how AI systems are tested, deployed, and trusted in real-world settings.

Interested users and researchers are encouraged to share their feedback at feedback@anthropic.com.