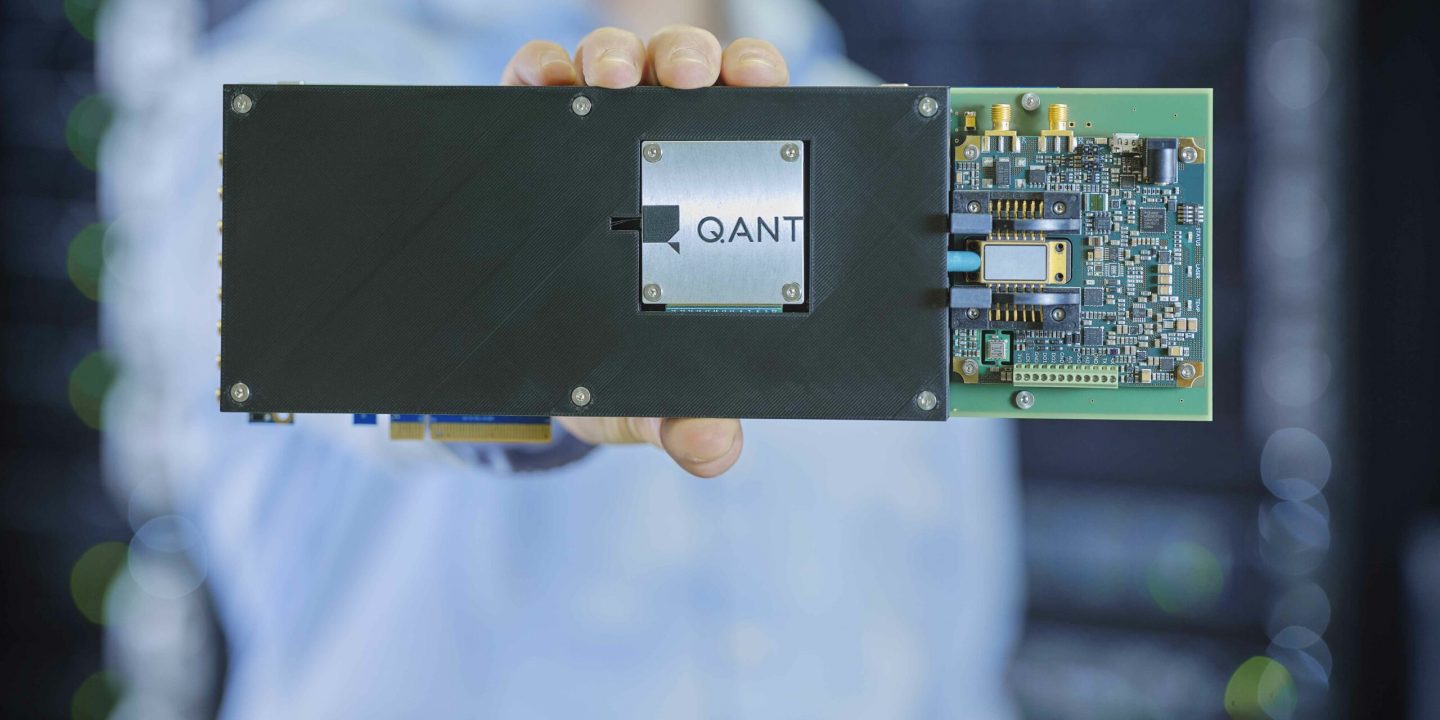

STUTTGART — Q.ANT has launched its first Native Processing Unit (NPU), marking a significant step in the evolution of photonic computing. Designed for high-performance applications such as AI inference, machine learning, and physics simulation, the NPU uses the company’s LENA (Light Empowered Native Arithmetics) architecture, which Q.ANT says offers major improvements in energy efficiency and computational performance. The NPU, based on Thin-Film Lithium Niobate (TFLN) on Insulator chips, is fully compatible with existing computing ecosystems via the industry-standard PCI-Express interface. The technology promises at least 30 times better energy efficiency and a substantial boost in speed over traditional CMOS technology.

Q.ANT’s photonic chips are engineered to perform complex, non-linear mathematical operations using light, providing a sustainable alternative to traditional electronic computing. Dr. Michael Förtsch, CEO of Q.ANT, highlighted that photonics can significantly reduce the energy consumption of processes like GPT-4 queries, which are currently 10 times more energy-intensive than standard internet searches. Photonic computing could reduce that energy consumption by a factor of 30, making it a key enabler for more sustainable AI applications.
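To put the two figures side by side, the short sketch below works through the arithmetic in normalized units. It assumes the factor-of-30 reduction applies to the full energy of a query, which the announcement does not spell out; the constant and function names are illustrative and do not come from Q.ANT.

```python
# Back-of-the-envelope comparison using only the two ratios quoted above.
# Assumption: the 30x reduction applies to the entire energy of a query.

SEARCH_ENERGY = 1.0        # one standard internet search, normalized to 1
GPT4_QUERY_FACTOR = 10.0   # a GPT-4 query costs about 10x a search (quoted)
PHOTONIC_REDUCTION = 30.0  # claimed reduction factor for photonic computing


def reduced_energy(energy: float, reduction: float) -> float:
    """Return the energy left after dividing by a reduction factor."""
    return energy / reduction


gpt4_today = SEARCH_ENERGY * GPT4_QUERY_FACTOR
gpt4_photonic = reduced_energy(gpt4_today, PHOTONIC_REDUCTION)

print(f"GPT-4 query today:        {gpt4_today:.2f} search-equivalents")
print(f"GPT-4 query, 30x cheaper: {gpt4_photonic:.2f} search-equivalents")
```

Under that assumption, a query would drop from roughly ten search-equivalents to about a third of one.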

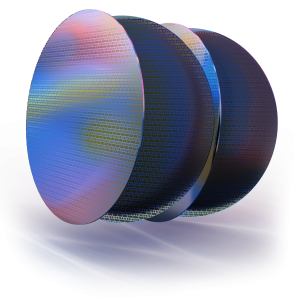

The Q.ANT NPU relies on the company’s proprietary LENA platform, which has been under development since 2018. By leveraging its control over the entire manufacturing process, from wafer to finished processor, Q.ANT achieves performance far superior to that of CMOS chips. For example, a Fourier transform that would require millions of transistors in traditional systems can be completed with a single optical element in the Q.ANT NPU.
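For a sense of why a Fourier transform is costly on digital hardware, the sketch below computes a discrete Fourier transform directly and counts the complex multiplications needed by the naive method and by a radix-2 FFT. It illustrates the digital workload only. It does not model Q.ANT's optics, and the transistor figure quoted above is not derived from it. All function names are illustrative.

```python
import cmath


def naive_dft(signal):
    """Discrete Fourier transform computed directly from its definition."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def naive_dft_multiplications(n: int) -> int:
    """The direct method multiplies every input sample for every output bin."""
    return n * n


def fft_multiplications(n: int) -> int:
    """Twiddle-factor multiplications in a radix-2 FFT (n a power of two)."""
    if n < 1 or n & (n - 1) != 0:
        raise ValueError("n must be a power of two")
    stages = n.bit_length() - 1  # exact log2 for powers of two
    return (n // 2) * stages


if __name__ == "__main__":
    # A constant signal has all of its energy in the zero-frequency bin.
    print([round(abs(x), 6) for x in naive_dft([1, 1, 1, 1])])
    for size in (64, 1024, 4096):
        print(size, naive_dft_multiplications(size), fft_multiplications(size))
```

Even with the FFT, the work grows with the input size, and each multiplication is itself built from many logic gates. That scaling is the cost an optical element performing the transform in a single pass would avoid.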

Eric Mounier, Chief Analyst at Yole Group, praised Q.ANT’s novel approach, noting its potential to address the growing energy demands of the AI industry. The NPU’s efficiency is expected to bring immediate benefits in AI inference and training, particularly for large language models and other machine learning applications. Test runs on various datasets, such as MNIST, have shown that the Q.ANT NPU achieves accuracy comparable to that of linear networks but with significantly lower power consumption.

The Q.ANT NPU also excels in simulations, reducing the number of parameters and operations required for machine learning tasks. In image recognition, it demonstrates superior training speed and accuracy, outperforming conventional approaches even with fewer parameters. The NPU is also poised to advance physics simulations, perform time-series analysis more efficiently, and solve graph problems faster, thanks to its use of light for mathematical computations.

The Q.ANT NPU is now available for pre-order and will begin shipping in February 2025. Offered as a turnkey Native Processing Server (NPS), it can easily integrate into existing HPC or data center environments. Developers can access the NPU through the Q.ANT Toolkit, which integrates seamlessly with existing AI software stacks. For more details, pre-orders, or pricing, interested parties can contact Q.ANT directly.