LONDON — On September 26, 2024, Google DeepMind released an addendum in Nature that explores the impact of AlphaChip, an AI-driven method that has transformed computer chip design. Building on their initial 2020 preprint and subsequent publication, this release introduces the AlphaChip model, along with a pre-trained checkpoint and shared model weights. Since its introduction, AlphaChip has reshaped the complex field of chip design, leveraging reinforcement learning to produce layouts that outperform human designs.

AlphaChip’s core breakthrough is speed: it generates optimized chip layouts in a few hours, where manual design traditionally took weeks or months. The method has been used across three generations of Google’s Tensor Processing Unit (TPU) hardware, where its layouts have helped improve the speed and performance of AI models based on Google’s Transformer architecture. These TPUs, vital to Google’s advanced AI systems, support applications ranging from large language models like Gemini to media-generating tools such as Imagen and Veo. AlphaChip’s reach extends beyond Google, with companies such as MediaTek adopting the method to refine their own chip development processes.

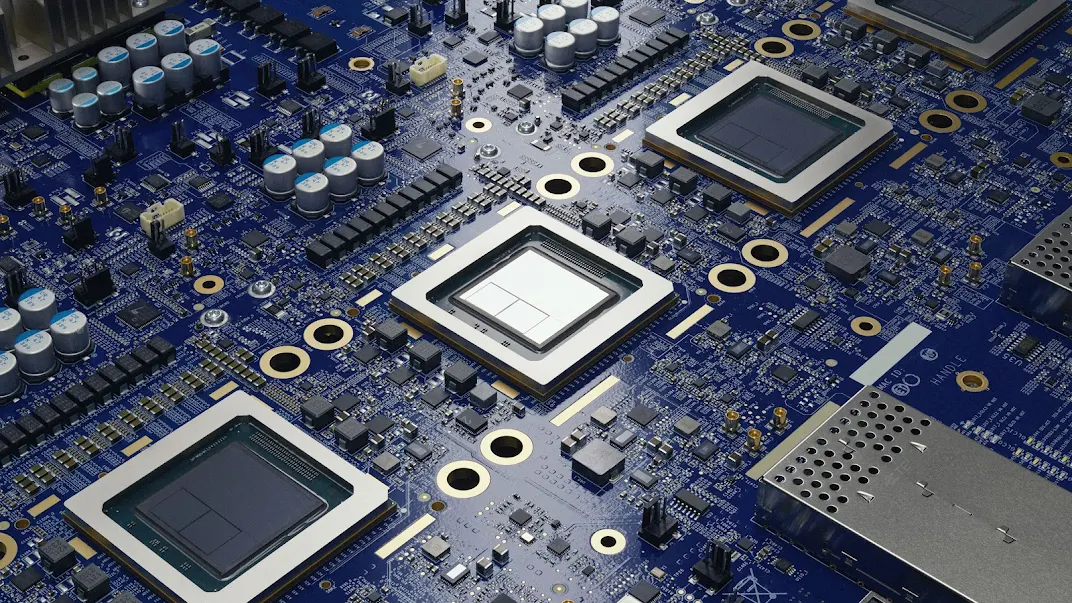

The underlying challenge of chip design involves managing the placement of numerous interconnected components, constrained by complex design requirements. AlphaChip tackles this task by framing chip floorplanning as a game-like challenge, similar to how AlphaGo and AlphaZero mastered games like Go and chess. Using an edge-based graph neural network, AlphaChip learns and adapts to the intricate relationships between chip elements, becoming more proficient with each design it produces. This approach enables AlphaChip to create more efficient layouts that optimize wirelength and meet various design constraints.
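To make the "game" framing concrete, here is a minimal sketch in Python. It is not AlphaChip's implementation. It assumes a toy grid, one component per cell, and half-perimeter wirelength (HPWL), a common proxy for wirelength, as the score. A greedy rule stands in for the learned policy, and the names `hpwl` and `place_sequentially` are hypothetical.

```python
from itertools import product


def hpwl(nets, positions):
    """Half-perimeter wirelength: sum of each net's bounding-box width + height."""
    total = 0
    for net in nets:
        pts = [positions[c] for c in net if c in positions]
        if len(pts) < 2:
            continue  # a net with fewer than two placed components adds no length
        xs, ys = zip(*pts)
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def place_sequentially(components, nets, grid_w, grid_h):
    """Place one component per step, like one move per turn in a board game.

    Assumption: a greedy choice replaces the learned policy described in the article.
    """
    positions = {}
    free = set(product(range(grid_w), range(grid_h)))
    for comp in components:
        # Choose the free cell with the lowest wirelength so far;
        # ties go to the first cell in sorted order.
        best = min(sorted(free), key=lambda cell: hpwl(nets, {**positions, comp: cell}))
        positions[comp] = best
        free.remove(best)
    return positions


components = ["a", "b", "c"]
nets = [("a", "b"), ("b", "c")]  # each net lists the components it connects
layout = place_sequentially(components, nets, grid_w=3, grid_h=3)
reward = -hpwl(nets, layout)  # end-of-episode score: shorter wires score higher
```

In a reinforcement learning setup, the greedy rule would be replaced by a policy trained to maximize the final reward. A realistic reward would also account for the other design constraints the article mentions, not wirelength alone.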

Since its deployment, AlphaChip has contributed to the design of TPUs, including the latest sixth-generation Trillium model, achieving enhanced layouts and accelerating the overall design timeline. It first trains on a diverse set of previous-generation chip blocks, such as memory controllers and data transport buffers, before applying its skills to current TPU layouts. This iterative learning process allows AlphaChip to continue improving its performance, similar to the way human experts refine their craft.

Beyond its direct applications within Google, AlphaChip has spurred broader interest in the research community and the chip design industry. Its methods have inspired new research on applying AI to other stages of chip development, from logic synthesis to timing optimization. Professor Siddharth Garg of NYU Tandon School of Engineering, for one, has described AlphaChip as a catalyst for AI research across the design process.

Looking ahead, the team behind AlphaChip sees potential to reshape the entire chip design cycle, including stages such as computer architecture and manufacturing. Future iterations of AlphaChip aim to produce even more efficient and cost-effective chips, with benefits that could extend to everyday technologies such as smartphones, medical devices, and agricultural tools. With continued collaboration with the broader community, AlphaChip is poised to drive a new era of innovation in chip design.