MENLO PARK — Meta is advancing its computer vision work, releasing new models and tools alongside a benchmark for evaluating fairness in AI systems. Its latest release, DINOv2, a computer vision model trained with self-supervised learning, is now available under the Apache 2.0 license. The release includes models for semantic image segmentation and depth estimation, broadening the range of possible applications. With DINOv2, Meta aims to advance AI innovation with a focus on openness and collaboration in the computer vision community.

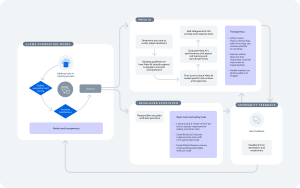

Meta has also introduced FACET (FAirness in Computer Vision EvaluaTion), a benchmark for assessing fairness in computer vision models. The dataset includes 32,000 images of 50,000 people annotated for demographic attributes such as gender presentation, age group, and skin tone, as well as person-related classes such as occupation. FACET aims to show how models perform across diverse groups and to help identify and mitigate biases in classification, detection, and segmentation tasks.

Preliminary tests with FACET have revealed performance disparities in state-of-the-art models, particularly regarding skin tone and gender presentation. Meta’s goal with FACET is to enable researchers and developers to better evaluate and address fairness concerns in their own models.
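
To illustrate what a performance disparity between groups can look like in practice, the following is a minimal sketch of a per-group recall comparison. It is not FACET's API or Meta's evaluation code: the function names, the group labels, and the toy records are all hypothetical, and the recall gap shown here is only one simple way to summarize a disparity.

```python
from collections import defaultdict


def recall_by_group(records):
    """Compute recall per demographic group.

    `records` is an iterable of (group, is_correct) pairs, one per
    ground-truth positive example. Both fields are hypothetical
    stand-ins for whatever annotations a real benchmark provides.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        hits[group] += int(is_correct)
    return {group: hits[group] / totals[group] for group in totals}


def max_disparity(recalls):
    """Return the gap between the best and worst per-group recall."""
    return max(recalls.values()) - min(recalls.values())


# Toy, made-up predictions for illustration only (not real results).
toy_records = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]

recalls = recall_by_group(toy_records)
gap = max_disparity(recalls)
print(recalls, gap)
```

A gap near zero would suggest the model finds people in each group at similar rates on this metric; a larger gap flags a group for closer inspection.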

Meta continues to emphasize the importance of open-source research, allowing the community to contribute to and improve AI models like DINOv2. The open release of these tools reflects Meta’s commitment to responsible AI innovation while driving further progress in computer vision.