MENLO PARK - Meta has introduced Movie Gen, a set of generative AI models for creating and editing media. Aimed at everyone from aspiring filmmakers to casual video creators, Movie Gen is meant to broaden access to sophisticated production tools. From simple text prompts, users can generate custom videos, edit existing footage, and turn personal images into unique videos. Meta says the models outperform similar models in the industry.

Movie Gen is the latest step in Meta’s ongoing AI research. Meta describes it as the third wave of its generative media work, following the Make-A-Scene series, which enabled the creation of image, audio, video, and 3D animation, and a later round of work built on Llama and diffusion models. Movie Gen combines these media modalities and gives users finer-grained control than the earlier models, which Meta expects to open the way for new creative products and experiences.

Importantly, Meta emphasizes that generative AI is not intended to replace human artists and animators but to complement their work. The goal is to empower individuals to express themselves in new ways, providing opportunities for those who may not have had access to such tools before. The aspiration is to one day enable everyone to bring their creative visions to life, producing high-definition videos and audio through Movie Gen.

A Closer Look at Movie Gen’s Capabilities

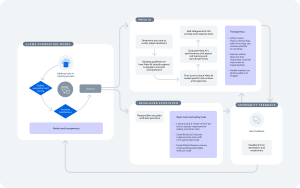

Movie Gen boasts four primary functions: video generation, personalized video creation, precise editing, and audio generation. Each of these capabilities has been developed using a mix of licensed and publicly available datasets, and detailed technical insights will be shared in an accompanying research paper.

- Video Generation: Utilizing a 30B parameter transformer model, Movie Gen can generate high-definition videos up to 16 seconds long at 16 frames per second, skillfully interpreting object motion and camera dynamics to create realistic scenes.

- Personalized Video Creation: Given a person’s image and a text prompt, Movie Gen can generate a personalized video that features that person while adding visual detail drawn from the prompt. Meta reports strong results in preserving the person’s identity across the generated video.

- Precise Video Editing: This feature takes both a video and a text prompt as input and makes targeted edits, such as modifying the background or replacing individual elements, while leaving the rest of the original footage unchanged. Conventional editing tools typically require specialized skills to reach this level of precision.

- Audio Generation: A 13B parameter audio model enables the production of high-quality audio to complement video content, generating sound effects, background music, and more, all synchronized with the visuals.
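
As a quick sanity check on the video figures above, the maximum clip length and frame rate together imply a frame budget per generated clip. The sketch below is illustrative arithmetic only: the function name `frame_count` and the constants are hypothetical and are not part of any Meta API.

```python
def frame_count(duration_s: float, fps: int) -> int:
    """Return the number of frames in a clip of the given length and frame rate."""
    return round(duration_s * fps)


# Figures quoted in the article: up to 16 seconds at 16 frames per second.
MAX_DURATION_S = 16
FPS = 16

print(frame_count(MAX_DURATION_S, FPS))  # 256
```

In other words, a maximum-length clip corresponds to 256 generated frames.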

Looking Forward

Meta acknowledges the potential of these foundation models while recognizing existing limitations, such as the need for further optimizations to improve inference time and model quality. As the team works toward future releases, they are committed to collaborating with filmmakers and creators to incorporate feedback, ensuring the tools are tailored to enhance creative expression.

Looking further ahead, Meta envisions users easily animating personal stories, creating custom greetings, and pursuing other creative projects, changing the way content is produced and shared.