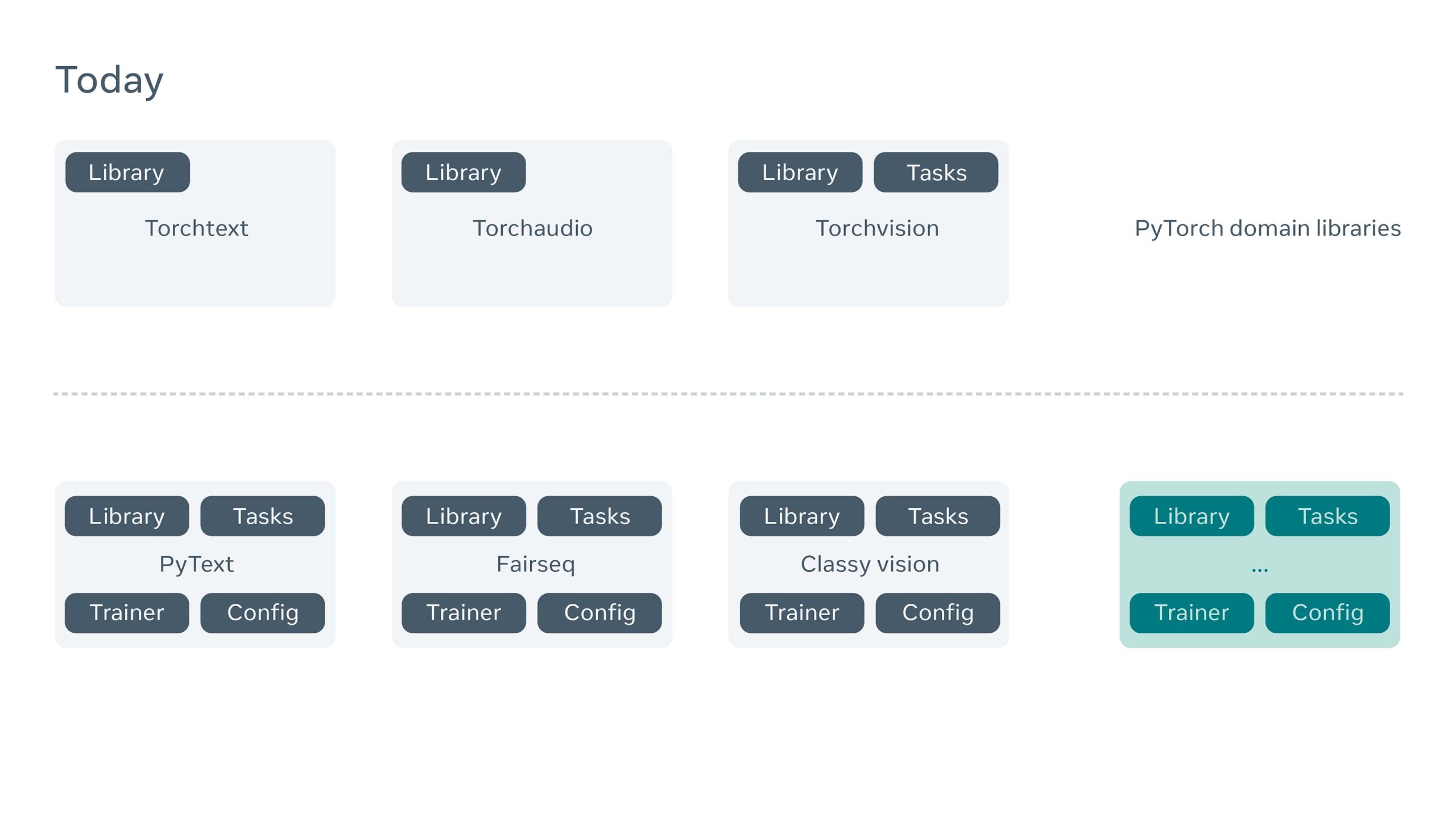

MENLO PARK — Artificial intelligence (AI) plays a crucial role at Facebook, powering everything from intelligent shopping recommendations and harmful content detection to text translation and automated caption generation. To achieve this, Facebook AI has developed several deep learning platforms, such as ClassyVision, Fairseq, and PyText, which allow for seamless model training and deployment. However, these platforms tend to rely on custom abstractions and tight coupling between components, limiting their flexibility for other projects and making it harder for researchers and advanced users to extend them to new use cases.

To address these challenges, Facebook AI is reengineering its platforms to make them more modular and interoperable. By breaking down components into standalone libraries and leveraging Facebook’s open-source Hydra framework for handling configurations, Facebook AI aims to increase the flexibility of these platforms while maintaining backward compatibility for end-to-end training. Additionally, the platforms will integrate with PyTorch Lightning, an open-source Python library that enhances modularity and manages training loops. This move is intended to standardize AI development across communities and encourage collaboration between different research fields.

Historically, content understanding in AI has been divided between computer vision and natural language processing (NLP), with each field developing its own specialized tools. However, deep learning has allowed for increased collaboration between these fields, making it easier to apply ideas from one area to another. For example, concepts from NLP, such as Transformer layers, are now being successfully applied to computer vision challenges. Facebook AI’s goal is to create standardized, interchangeable building blocks across AI subfields to accelerate progress and make AI more accessible to developers worldwide.

As part of this reengineering, Facebook AI is modularizing its platforms, splitting each one into standalone libraries with multiple entry points. Users can continue to drive training through configuration files, while power users gain more control and the flexibility to integrate other libraries into their projects. By collaborating with external tools like PyTorch Lightning and Hydra, Facebook AI is laying the groundwork for more modular, scalable AI systems that can be used across different AI domains.
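To make the idea of a configuration-driven entry point concrete, here is a minimal sketch in plain Python. It is not Hydra's API and not code from any Facebook AI platform. The names `ExperimentConfig`, `OptimizerConfig`, `TrainerConfig`, and `apply_overrides` are hypothetical, and the dotted `key=value` override syntax is only an assumption about what such a tool might accept.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class OptimizerConfig:
    name: str = "sgd"
    lr: float = 0.1


@dataclass
class TrainerConfig:
    max_epochs: int = 10
    cluster: str = "local"  # hypothetical launch target


@dataclass
class ExperimentConfig:
    optimizer: OptimizerConfig = field(default_factory=OptimizerConfig)
    trainer: TrainerConfig = field(default_factory=TrainerConfig)


def apply_overrides(cfg: ExperimentConfig, overrides: List[str]) -> ExperimentConfig:
    """Apply 'dotted.key=value' overrides in place and return cfg."""
    for item in overrides:
        key, _, raw = item.partition("=")
        *parents, leaf = key.split(".")
        node = cfg
        for name in parents:
            node = getattr(node, name)
        if not hasattr(node, leaf):
            raise KeyError(f"unknown config key: {key}")
        current = getattr(node, leaf)
        # Cast the raw string to the type of the existing default.
        # (Sufficient for the str/int/float fields used here; not for bool.)
        setattr(node, leaf, type(current)(raw))
    return cfg


# Config-file style use: defaults plus command-line style overrides.
cfg = apply_overrides(
    ExperimentConfig(), ["optimizer.lr=0.01", "trainer.cluster=slurm"]
)
```

In this sketch the application code never changes when the learning rate or launch target does. A power user could equally skip the overrides and construct `ExperimentConfig` directly in their own script, which is the kind of second entry point the modular design is meant to allow.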

PyTorch Lightning separates the scientific components of a deep learning project from the engineering aspects, making it easier to standardize and automate tasks. With the release of PyTorch Lightning version 1.0, Facebook AI is making its platforms compatible with the library and adopting its abstractions, such as Callbacks, DataModules, and LightningModules. Meanwhile, the Hydra framework helps users manage complex configurations and supports launching jobs on different clusters without modifying application code.
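The split between science and engineering can be sketched without any deep learning library at all. The example below is plain Python and does not use the PyTorch Lightning API. `LinearModule`, `LossHistory`, and `Trainer` are hypothetical stand-ins for the roles that a module, a callback, and a training loop play, and the hook names are illustrative only.

```python
from typing import List, Sequence, Tuple

Batch = Tuple[float, float]


class LinearModule:
    """'Science' side: the model y = w * x, its loss, and its update rule."""

    def __init__(self, lr: float = 0.05) -> None:
        self.w = 0.0
        self.lr = lr

    def training_step(self, batch: Batch) -> float:
        x, y = batch
        error = self.w * x - y
        # Gradient of the squared error (w*x - y)^2 with respect to w.
        self.w -= self.lr * 2 * error * x
        return error ** 2


class LossHistory:
    """Callback: records the mean loss at the end of every epoch."""

    def __init__(self) -> None:
        self.losses: List[float] = []

    def on_epoch_end(self, epoch: int, mean_loss: float) -> None:
        self.losses.append(mean_loss)


class Trainer:
    """'Engineering' side: owns the loop and notifies callbacks."""

    def __init__(self, max_epochs: int, callbacks: Sequence = ()) -> None:
        self.max_epochs = max_epochs
        self.callbacks = list(callbacks)

    def fit(self, module, data: Sequence[Batch]) -> None:
        for epoch in range(self.max_epochs):
            losses = [module.training_step(batch) for batch in data]
            mean_loss = sum(losses) / len(losses)
            for callback in self.callbacks:
                callback.on_epoch_end(epoch, mean_loss)


# Toy data drawn from y = 2x; the loop never needs to know that.
history = LossHistory()
module = LinearModule(lr=0.05)
Trainer(max_epochs=20, callbacks=[history]).fit(
    module, [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
)
```

Because the loop lives in `Trainer` and the model logic lives in `LinearModule`, either side can be swapped without touching the other. That is the property that lets a shared training loop serve platforms built for different research fields.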

The ultimate goal of this reengineering effort is to foster more collaboration across AI subfields, share innovations more easily, and create a more unified approach to AI development. By standardizing tools and making them more flexible, Facebook AI is positioning itself at the forefront of the AI community’s efforts to build the next generation of intelligent systems.